I just upgraded from a 5800X to a 5800X3D (with 3080 Ti). Indeed, I get a solid fps improvement in airports, probably 9-15 fps more. Heathrow with full AIG is still jerky, but less so. For airports where I would get 45 fps before, now it’s more like 60, which is definitely very noticeable.

I set the quality to Ultra but turn down the detail to 100, then there is no loading when quickly panning around and just smooth flying at over 60 fps when outside busy airports. I find that at LOD 200 there is still some jerkiness when looking around (I use a Tobii eye tracker so there’s a lot of side to side movements which is taxing on the system).

I am undecided about whether to upgrade from my 5900X to a 5800X3D. I only want to run at 60 fps with VSync ON (50% monitor refresh). Presently there is occasional jerkiness at 4K 60 (I have a 3090 with DLSS set to max performance, so the GPU is not the bottleneck). These stutters mostly happen on panning shots. To remove the jerkiness I run at 40 fps (33% of monitor refresh). I prefer the frame consistency at 40 fps to the occasional stutter at 60 fps. But I like the smoothness at 60 fps, so I was wondering whether a 5800X3D would help remove these stutters. Basically I am not targeting fps, but frame time consistency.
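
For reference, the VSync fractions in this post (60 fps at 50%, 40 fps at 33%) imply a 120 Hz monitor. Here is a minimal Python sketch of the per-frame time budget at each cap. The 120 Hz figure is inferred rather than stated, and the function name is made up for illustration.

```python
def frame_budget_ms(refresh_hz: float, divisor: int) -> tuple[float, float]:
    """Return (fps cap, frame-time budget in ms) for VSync at refresh_hz / divisor."""
    fps = refresh_hz / divisor
    return fps, 1000.0 / fps

# Assumption: a 120 Hz monitor, inferred from "60 fps = 50% of monitor refresh".
REFRESH_HZ = 120.0

for divisor in (2, 3):
    fps, budget = frame_budget_ms(REFRESH_HZ, divisor)
    print(f"1/{divisor} refresh: {fps:.0f} fps cap, {budget:.2f} ms per frame")
```

At the 40 fps cap each frame has 25 ms instead of about 16.7 ms, so a CPU spike has roughly 8 ms more headroom before it shows up as a missed frame.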

I just went from a 5950X to a 5800X3D and I’m happy! I gained between 15 and 20 fps! Everything is more fluid and the CPU frame time has dropped by an average of 2 ms. The 5800X3D is more efficient than the 5950X for MSFS!

I’ve just upgraded from a 5800X to a 5800X3D and I saw a nice uplift in CPU-demanding scenarios. PMDG 737-800 on the ground at EGLL saw a ~9FPS increase. Interestingly I saw no improvement flying low over Tokyo, in a similar fashion to the OP, which I assume means I was GPU bound in that scenario.

Of course, I can’t say that it is exactly the same as the current MSFS because the versions, etc. are different, but you can see the GPU Power when I did the benchmark.

FPS difference verification between AMD 5950X and 5800X3D at Haneda Airport (RJTT) 34L straight out flight - #26 by KanadeNyan

With the 5950X, GPU Power typically shows around 280W, which is below the 350W TGP of the card I have.

With the 5800X3D, GPU Power is 300W or more, up to 320W, leaving almost no margin. I can say that the GPU is generally fully used.

If you add an add-on that uses more CPU, the CPU will not have enough power to supply data to the GPU and the GPU power consumption will go down. The CPU and GPU were probably well balanced in the configuration at the time.

Very interesting topic

I am running an overclocked 5900X that reaches 5.15 GHz max on one core, driving a Reverb G2 at native resolution (2x 2160x2160) with a 4090

Would a 5800X3D still be an upgrade in my case?

It seems that normally, the higher the resolution, the less the CPU is the bottleneck

But maybe MSFS is a bit different and I would still benefit by going to the 5800x3d on this resolution?

How much difference there is in any one area depends on each situation, so you may not get a satisfactory answer.

However, the 5900X is 2CCD x 6C12T with the same configuration as the 5950X in my data, and the results will be very similar.

The higher the screen resolution, the less of a bottleneck the CPU becomes, because the relative utilization of the GPU increases, so performance is more likely to be limited by the GPU.

Even so, it is not just one part of a scenario that is limited by the CPU. Many parts, such as additional traffic, are limited by internal processing, such as when the content appears and the order in which it is controlled.

Swapping is a viable option because both processors use the AM4 platform.

Thanks for your reply!

I Took a chance and swapped the 5900X for the 5800X3D

But not before doing my first little benchmark:

Created a flight in the C 172 from EGLC to EGLL at 10 am on the 1st of December in clear skies and had the autopilot fly.

Captured in CapFrameX for 120 seconds in total of which 60 outside the cockpit by changing views after a minute

Specs:

Asus ROG Strix X570-E Gaming

Kingston SKC 2500 1000 GB Nvme

G Skill 3200 CL16 32 GB DDR 4 Ram (2 x 16)

GeForce 4090 (PNY), undervolted and memory boosted by 1000

HP Reverb G2, 100% scale, no Open XR Toolkit used for these tests

all MSFS settings maxed out using TAA

I did have the 5900X overclocked with PBO, running all cores at -30 except the main one at -24 (as per Ryzen Master’s suggestion after running the optimizer)

Then I changed the CPU and did the same again

Here is the comparison in CapFrameX and Cinebench R23:

5900X

Average FPS 32.9

1% FPS 24.7

0.2 % FPS 21.1

Cinebench R23 Multi 23002, Single 1617

5800 X3D

Average FPS 41.1

1% FPS 27.1

0.2 % FPS 22.7

Cinebench R23 Multi 14847, Single 1460

It feels like the 5800X3D is running smoother, but not by a lot

Needless to say the hit in multicore seems significant
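
To put numbers on that trade-off, here is a small Python sketch that turns some of the figures above into percentage changes. The values are copied from this post; the helper name is just for illustration.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change going from `before` to `after`."""
    return (after - before) / before * 100.0

# Figures copied from the results above (5900X first, 5800X3D second).
results = {
    "Average FPS": (32.9, 41.1),
    "1% low FPS": (24.7, 27.1),
    "Cinebench R23 multi": (23002, 14847),
    "Cinebench R23 single": (1617, 1460),
}

for name, (old, new) in results.items():
    print(f"{name}: {pct_change(old, new):+.1f}%")
```

That works out to roughly +25% average FPS and +10% on the 1% lows, against about -35% in Cinebench multi-core and -10% single-core.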

Exactly the same on my 5800X via gaming mode in Ryzen Master. Boosts to 5GHz and if anything now runs cooler than stock. For anyone that hasn’t already done this I can only recommend. (5xxx series only)

I swapped out my 5900x with a 5800x3d. There is some improvement in frame time consistency but still not there. I prefer using DLSS3 and cap the frame rate @ 60 fps via VSync in the Nvidia Control Panel, so basically the sim is rendering @ 30 fps which is what the game engine can handle without stuttering and sudden jerks. With 5800x3d overall stuttering has reduced @ 60 fps but sudden jerky movements once in a while still present. My test is 60 fps in New York Discovery flight. With DLSS3 smoothness is perfect I mean 99% perfect. I can live with the latency and occasional artifacting. Latency can be reduced by running the monitor @ 120 Hz and running MSFS with Half VSync. Note DLSS3 artifacting is severe when rendering above 100%.

Edit : I feel all this stuttering is mainly software issue. In one driver update a month ago, DX12 @ 60 fps was nearly perfect. But now DX12 is worse. HAGS is another factor that makes for stuttery experience. Without DLSS3, I found DX11 + HAGS OFF is the best solution for anything above 40 fps (as of today).

I noticed you have MSFS on Optane. What kind of loading times do you get with it? What do you get from Desktop to menu and from menu in to a flight?

My primary focus in this test was the CPU difference.

To minimize as much as possible the difference due to storage, I used Optane, which has the fastest random reads of any storage I have. In other words, I chose it as a means to minimize reads during flight.

It is not the large content reads that take the most time when starting MSFS.

It is to build the virtual file system directory tree, and this part is not very storage transfer rate dependent.

In other words, load times do not vary much just because it is a fast SSD. Perhaps replacing it with a SATA SSD will not make a noticeable difference.

Hi, just wondering, have you done this test with the latest sim update, and does turning SMT on still negatively affect performance with Sim Update 11?

I have not tested with SU11, but I believe that it is faster without SMT, for the following reasons, which are (probably) the same as the previous trend.

(I am not claiming that “no SMT is better”. I just want you to understand that there are not many cases where fine-grained parallelization will result in higher speed)

- Parallelism of MSFS

(Maybe) MSFS is currently not able to use up 8T, while we are flying.

If MSFS becomes able to use more than 8T, then since the 5800X3D is 8C16T, there is a possibility that SMT will be faster.

Until then, 8C8T is (probably) faster. Worst case is also equivalent.

- Processor temperature and boostability in SMT configuration

MSFS is sensitive to single-threaded performance, and the only way for a processor to increase single-threaded performance is to do a core-clock boost.

Current processors boost dynamically, choosing the boost clock and power delivery while monitoring the processor temperature. For the same workload, the processor runs hotter with SMT on than with SMT off, because the core blocks are idle less often and use more power. This raises the overall chip temperature.

As the temperature rises, the upper limit of boost is reduced.

This is why it is more advantageous to go without SMT. Of course, with sufficiently effective cooling, the two would be equivalent.

- CPU cache reset by implementation of processor security

With hyperthreading, speculative execution is now constrained by security mitigations. CPU manufacturers apply these mitigations in firmware, and many of them work by clearing the CPU cache.

If a cache reset occurs while an application is running, performance “may” drop.

If you are not using HT, you could still hit the problem, but it is less likely.

(If you are interested, a search on CVE-2018-3615, CVE-2018-3620, CVE-2018-3646, etc. will give you an overview. Speedup through speculative execution has long been used in processor technology, and hyperthreading is essentially aimed at hiding memory access latency through this mechanism. The large number of logical processors we see is a bonus.)

While I agree with all of this, it must be said that turning SMT or HT off does have its drawbacks when it comes to high-quality recording or multicore processing, e.g. video rendering, Blender etc. Also, I would not consider it for a six-core processor, e.g. the 5600X, because then normal Windows processes are almost certainly going to impact performance, and at the most inopportune times when internet and wifi are in use.

Yes, of course it is.

As a compromise, you can leave SMT enabled in UEFI and set processor affinity only for the applications that need it.

By assigning every other logical processor (1, 3, 5, 7, …), the operating system can run the specified application approximately as if SMT were off (within the margin of error of a real SMT-off setting).

For CMD.exe, start /affinity 0x5555 , etc.

For PowerShell, you can set the ProcessorAffinity property of System.Diagnostics.Process.

There are also tools that can set affinity masks, such as Processlasso.

PowerShell sample

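    # Launch MSFS, then restrict it to every other logical processor.
    $MSFS = New-Object -TypeName System.Diagnostics.Process
    $MSFS.StartInfo = New-Object -TypeName System.Diagnostics.ProcessStartInfo
    $MSFS.StartInfo.FileName = "FULLPATH to MSFS.exe"
    $MSFS.StartInfo.Arguments = "FULLPATH to MSFS.ini"
    $MSFS.Start()
    # Wait for the startup check to pass first; 10 seconds is a starting point, tune per system.
    Start-Sleep -Seconds 10
    $MSFS.ProcessorAffinity = 0x5555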

(However, MSFS will fail the startup check if the affinity mask does not match the number of threads on the processor at startup, so the affinity mask must be set only after waiting from a few seconds up to 10 seconds, depending on the environment. Please tune the delay for your own system.)

At least for the MSFS measurements I made, there was only a margin of error difference between the UEFI allocation and the affinity mask.

By setting these, it is possible to have both applications suitable for multi-threading and pseudo SMToff in games.

P.S. On the 5800X3D, specifying 0x5555 as the affinity mask selects every other logical processor, that is, one thread per core across all 8 cores.
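
For anyone who wants to check a mask by hand, here is a short Python sketch that decodes an affinity mask into logical processor indices and builds the "every other processor" mask for a given thread count. The function names are made up for illustration, and the indices are zero-based (bit 0 is the first logical processor), unlike the one-based counting used elsewhere in this thread.

```python
def mask_to_cpus(mask: int) -> list[int]:
    """List the zero-based logical processor indices enabled in an affinity mask."""
    return [bit for bit in range(mask.bit_length()) if mask >> bit & 1]

def every_other_cpu_mask(logical_cpus: int) -> int:
    """Build a mask selecting logical processors 0, 2, 4, ... below logical_cpus."""
    return sum(1 << bit for bit in range(0, logical_cpus, 2))

print(mask_to_cpus(0x5555))           # [0, 2, 4, 6, 8, 10, 12, 14]
print(hex(every_other_cpu_mask(16)))  # 0x5555
```

By the same arithmetic, a 6C12T processor would use 0x555 and a 16C32T processor 0x55555555.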

I’ve never really looked into this as I always thought it set specific cores rather than disabling 2nd threads (in which case there’d be little benefit and little to no heat savings within those cores)

Thanks for the info and I guess it’s time to give it more study

P.S…

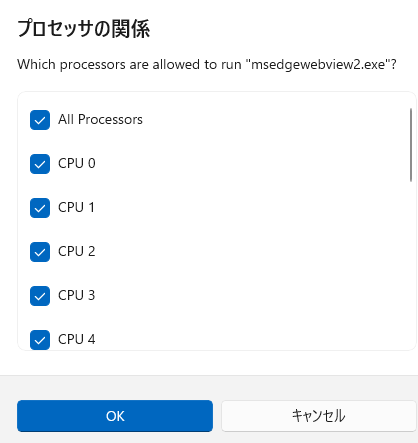

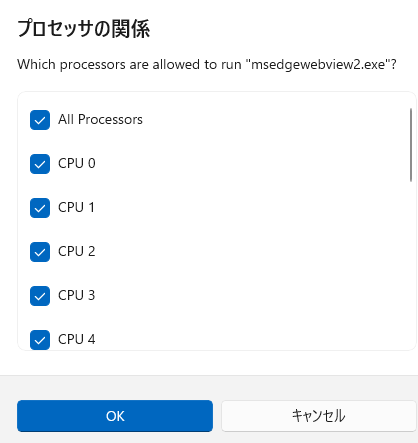

You can also set processor affinity directly on the running application from Task Manager: Details tab -> right-click the process -> Set affinity.

However, these settings are not saved.

(Therefore, experienced users prefer to create scripts or use other tools.)

Sorry for the Japanese display, I am Japanese. This is the affinity mask setting screen.

Now I’m confused as that definitely disables cores rather than threads … I shall have to study further to form an opinion.

On the surface, the operating system does not distinguish between physical cores and CPU threads, but logical processors are always numbered starting from the lowest processor core number.

If hyperthreading is on, the result will look like this:

CPU Core1-Thread1 = Windows Core no1

CPU Core1-Thread2 = Windows Core no2

CPU Core2-Thread1 = Windows Core no3

…

Therefore, the dialog appears to “disable cores,” but the affinity mask only controls which logical processors the configured application is allowed to run on.

The standard OS setting is to allocate to all cores (all threads).
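
The numbering described here can be sketched in a few lines of Python. This assumes, as in the description, that the two threads of each core get adjacent numbers and that counting is one-based; the function name is hypothetical.

```python
def core_and_thread(logical_cpu: int, threads_per_core: int = 2) -> tuple[int, int]:
    """Map a one-based Windows logical processor number to (core, thread), both one-based."""
    index = logical_cpu - 1
    return index // threads_per_core + 1, index % threads_per_core + 1

for n in range(1, 5):
    core, thread = core_and_thread(n)
    print(f"Windows Core no{n} = CPU Core{core}-Thread{thread}")
```

Under this numbering, ticking only the odd-numbered entries (1, 3, 5, …) leaves one thread per physical core, which is why the setting behaves like SMT off rather than like removing cores.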

A wrong setting will not break anything; affinity settings on Windows are not saved when the application exits. Simply relaunch the application.
