Well, not all DP-to-HDMI cables are created equal. Plenty of people here have found that out the hard way.

Also, HDR ignores millions of years of evolution among all animals, well before humans. While our eyes have a wide dynamic range, that dynamic range is limited by lighting. For example, a car shining its high beams at you at night makes it impossible to see anything beside the car - you have to look away. Same as looking into a bright light - which is why over 100 years ago WW1 pilots would fly high, then come out of the sun to attack the planes below them. Pilots looking into the sun to try to spot the attackers would be unable to, because the human eye’s dynamic range is situationally dependent.

Now on a small screen running at 1080p or 1440p, it really doesn’t matter - there’s not much of an option to “look away.” But on setups like mine, I would be royally cheesed off if the side screens’ details were nearly invisible because the software decided to dim the areas that are not so bright to increase the “popping out” effect of the brighter areas. This is totally contrary to real life, and with more people running multiple 4k displays, it’s just dumb.

I should be able to look to the sides, and see a normally lit scene, same as real life.

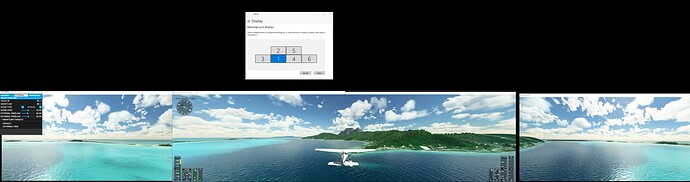

So why waste resources (CPU, GFX, etc.) on an effect that will be irrelevant to most multi-big-screen simmers in the near future? There are already people showing off their 3 x 75" displays. And then there are cases like mine, where I had to develop the biggest display possible to help low vision users decide what’s best for them.

Think of taking a sheet of plywood, and a second sheet. Cut off 2’ from one end of the second sheet, and stand both sheets on your desk. That’s my display area. HDR doesn’t work properly in such cases - it detracts from the true-to-life experience.

If I wanted HDR, I would enable it in the screens themselves, not in the MSFS software. The HDR in each screen is calibrated by the manufacturer to give optimal performance for that screen.

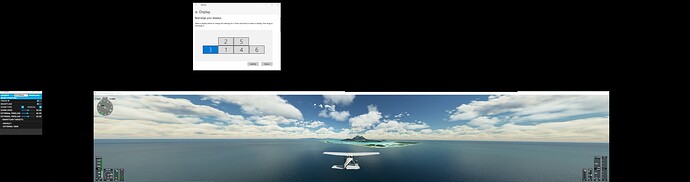

But I don’t, because (1) it’s not how vision works in real life, and (2) it’s a waste of resources. Right now I’m running at an effective resolution of 12kx4k (center 8k, sides 4k) - I’ve posted pics of what 16kx2k looks like, and it’s spectacular enough that I’m not going to waste my time on stuff like HDR. Especially since no two companies implement the 10 different standards the same way. I’d rather just enjoy the larger work surface for regular work, and the spectacular views when flying. HDR wouldn’t really add anything to the experience, so why bother?

Now if anyone else wants to post 16k screenshots that prove otherwise, let them. I’ve got no problem with that - except that nobody seems to have that capability right now, so it’s irrelevant.