Here is my current take. I’m trying to reason about how DLSS 3.0 frame rate upscaling could work in VR, what kind of latency you would get, and why it would be very challenging to “make it right”.

DISCLAIMER: I have no insider knowledge of DLSS 3.0, and AFAIK there is no technical documentation available, except for the FRUC article I posted above. Nothing I’m writing here is backed by technical data from Nvidia. Everything here is based on a quick analysis I did while drawing these few diagrams.

First, we start by looking at latency in the most ideal case: the app can render at full frame rate (i.e. it hits the refresh rate of the displays). No frame rate upscaling of any sort is done.

This is where we hit the minimal latency. At V-sync, we get a predicted display time, from which we can ask the tracking prediction algorithm for a camera pose to use for rendering. Just before display, we have an opportunity to perform spatial reprojection to correct the image (as much as we can; see my other post about reprojection) using the latest tracking information that we have.
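To make the role of prediction concrete, here is a minimal sketch assuming the simplest possible predictor: constant angular velocity on a single yaw axis. Real runtimes use more elaborate filters, and all function names here are hypothetical. The point it illustrates is why the prediction horizon matters: if the head accelerates, a constant-velocity guess drifts from the true pose by ½·a·t², so the error grows with the square of the horizon.

```python
def predict_yaw(yaw_deg: float, yaw_rate_dps: float, horizon_s: float) -> float:
    """Hypothetical constant-velocity predictor: extrapolate yaw to display time."""
    return yaw_deg + yaw_rate_dps * horizon_s


def true_yaw(yaw_deg: float, yaw_rate_dps: float, accel_dps2: float, t_s: float) -> float:
    """Ground truth under an assumed constant angular acceleration."""
    return yaw_deg + yaw_rate_dps * t_s + 0.5 * accel_dps2 * t_s ** 2


def prediction_error_deg(yaw_rate_dps: float, accel_dps2: float, horizon_s: float) -> float:
    """Angle that late-stage reprojection would have to correct for."""
    return abs(true_yaw(0.0, yaw_rate_dps, accel_dps2, horizon_s)
               - predict_yaw(0.0, yaw_rate_dps, horizon_s))


if __name__ == "__main__":
    frame_s = 1.0 / 90.0  # one display frame at 90 Hz
    # Illustrative head motion values, not measurements.
    for frames in (1, 2, 4):
        err = prediction_error_deg(yaw_rate_dps=100.0, accel_dps2=500.0,
                                   horizon_s=frames * frame_s)
        print(f"{frames} frame(s) of horizon -> {err:.3f} deg of error")
```

Doubling the horizon quadruples the error under this model, which is why every extra frame of latency discussed below hurts more than linearly when the head is accelerating.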

Note that the timeline for this “Reproj” and the actual display time isn’t to scale: scan-out (the beginning of the display on the actual panel) may happen more than 1 frame later, due to transmission latency.

In this example, I use the typical WMR end-to-end latency (40ms), which I’ve read is in the same range for other headsets. Everything is awesome…

Now we look at a more realistic case: the app cannot render at full frame rate. We assume here that the app renders at exactly half rate, and that this half rate is perfectly in phase with the display’s refresh rate (meaning we start rendering a frame exactly at V-sync):

We get the same predicted display time as before, because the platform optimistically assumes that we will hit the full frame rate. But when we don’t (see that “missed latching”: we did not finish rendering the frame on time), we increase our end-to-end latency by 1 frame. In other words, the predicted camera pose that we use for rendering will be 1 frame “older” than we wish. Whatever additional headset motion happens in that period of time will increase the tracking error. We still do spatial reprojection close to when the image is about to be displayed, but that reprojection might have to correct up to 1 more full frame of error, which reduces the smoothness (because spatial reprojection can only do so much, and it’s not very good at correcting depth perspective). But from a latency perspective, ~51ms (40ms plus one 11.1ms frame at 90 Hz) is still acceptable.
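The arithmetic behind these two numbers is simple enough to write down. A minimal sketch, assuming a 90 Hz display and the 40ms baseline from the previous diagram; the function names are hypothetical, for illustration only:

```python
def frame_period_ms(refresh_hz: float) -> float:
    """Duration of one display frame in milliseconds."""
    return 1000.0 / refresh_hz


def end_to_end_latency_ms(base_ms: float, refresh_hz: float, missed_frames: int) -> float:
    """Baseline latency plus one display frame per missed latching."""
    return base_ms + missed_frames * frame_period_ms(refresh_hz)


if __name__ == "__main__":
    full_rate = end_to_end_latency_ms(40.0, 90.0, missed_frames=0)
    half_rate = end_to_end_latency_ms(40.0, 90.0, missed_frames=1)  # 45 FPS, in phase
    print(f"full rate: {full_rate:.1f} ms, half rate: {half_rate:.1f} ms")
```

This prints `full rate: 40.0 ms, half rate: 51.1 ms`.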

Let’s take a look now at your typical motion reprojection. Again, you can read more about it in my other post:

The “forward reprojection” technique means you always start from the most recent image that you have, and you create a new frame from there by propagating motion (moving pixels in the direction you guess they are moving). This scenario does not increase latency. The most recent frame from the app is displayed as soon as possible. A new frame is generated next, and since it starts from the most recent frame, the end-to-end latency after propagation remains the same. We continue to use late-stage spatial reprojection to further cancel prediction error. From a latency perspective, the situation is identical to the one described previously. The combination of motion reprojection and spatial reprojection gives you a smoother experience than spatial reprojection alone (here again, see my other post), but it may cost quality (motion reprojection artifacts).
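To illustrate why forward reprojection only needs the most recent frame, here is a toy 1D sketch of forward splatting. It assumes per-pixel motion vectors (in pixels per display frame) and depth are already available for the latest app frame; real implementations work in 2D, have to estimate that motion, and fill holes, none of which is shown here. The function name is hypothetical. Holes (`None`) appear where a moving object uncovers background, which is one source of the motion reprojection artifacts mentioned above.

```python
from typing import List, Optional


def forward_reproject(color: List[int], depth: List[float],
                      motion: List[int]) -> List[Optional[int]]:
    """Push every pixel of the latest frame along its motion vector.

    Only the most recent frame is read, so no extra frame of latency is added.
    When two pixels land on the same target, the nearer one (smaller depth) wins.
    Targets that receive no pixel stay None: these are disocclusion holes.
    """
    n = len(color)
    out_color: List[Optional[int]] = [None] * n
    out_depth = [float("inf")] * n
    for x in range(n):
        target = x + motion[x]
        if 0 <= target < n and depth[x] < out_depth[target]:
            out_color[target] = color[x]
            out_depth[target] = depth[x]
    return out_color


if __name__ == "__main__":
    # Static far background (color 0) with a near object (color 7) moving 1 pixel right.
    color = [0, 0, 7, 7, 0, 0]
    depth = [10.0, 10.0, 1.0, 1.0, 10.0, 10.0]
    motion = [0, 0, 1, 1, 0, 0]
    print(forward_reproject(color, depth, motion))  # [0, 0, None, 7, 7, 0]
```

The object advances by one pixel and leaves a hole behind it that has to be filled somehow, while the latency stays that of the latest frame.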

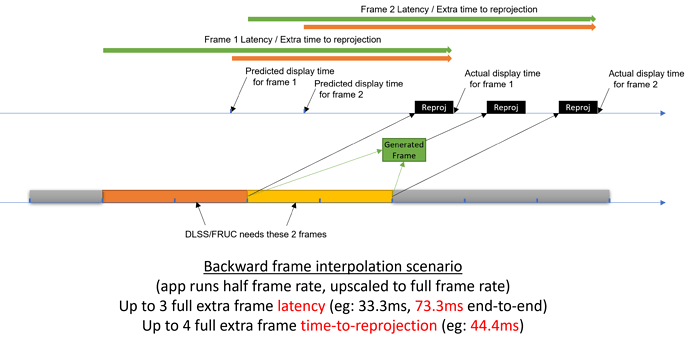

Finally, we look at what “backward frame generation” could mean. This is what DLSS 3.0 seems to be doing, but given the lack of technical documentation out there, this remains a guess.

Because backward frame interpolation needs the 2 most recent frames in order to generate a frame to go in-between them, we must wait until both frames are fully rendered before beginning the interpolation process. We submit the older frame for display in parallel with the interpolation. That frame, in the case of the app running at half frame rate, now has 2 extra frames of latency in terms of camera/tracking prediction, on top of the 1-frame latency penalty from the missed latching (see the earlier diagram). This takes us to a much higher latency (e.g. from 51.1ms to 73.3ms), beyond the threshold of what is acceptable. We still rely on spatial reprojection to attempt to correct the camera pose at the last moment. However, due to the extra wait for the 2nd app frame, that late-stage reprojection now needs to correct an error potentially 3x higher than before (1 frame of missed latching plus 2 frames of waiting), and it will do a much worse job than before. Actually, because the extra frames are inserted in-between the 1st and 2nd app frames, the 2nd app frame has an even longer time to late-stage reprojection: in the case above, 4 full frames. At 90 Hz that is 44.4ms of possible error. Any acceleration, deceleration, or sudden motion in that time period will cause noticeable lag.
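The numbers in this paragraph can be reproduced as a small calculation. This sketch takes the frame counts straight from the diagram described above (1 frame of missed latching, 2 frames of waiting for the 2nd app frame, 4 frames until the 2nd app frame is reprojected) and only converts them to milliseconds at 90 Hz. It is not a model of what DLSS 3.0 actually does, and the constant names are hypothetical.

```python
FRAME_MS = 1000.0 / 90.0  # one display frame at 90 Hz
BASE_MS = 40.0            # ideal-case end-to-end latency (WMR figure above)

# Frame counts read off the diagram for an app running at exactly 45 FPS.
MISSED_LATCHING_FRAMES = 1
WAIT_FOR_NEXT_APP_FRAME = 2
SECOND_FRAME_REPROJ_FRAMES = 4


def to_latency_ms(extra_frames: int) -> float:
    """End-to-end latency given a number of extra display frames of delay."""
    return BASE_MS + extra_frames * FRAME_MS


without_interp = to_latency_ms(MISSED_LATCHING_FRAMES)
with_interp = to_latency_ms(MISSED_LATCHING_FRAMES + WAIT_FOR_NEXT_APP_FRAME)
# How much more error the 1st app frame's late-stage reprojection must absorb.
first_frame_error_ratio = (MISSED_LATCHING_FRAMES + WAIT_FOR_NEXT_APP_FRAME) / MISSED_LATCHING_FRAMES
second_frame_window_ms = SECOND_FRAME_REPROJ_FRAMES * FRAME_MS

if __name__ == "__main__":
    print(f"latency: {without_interp:.1f} ms -> {with_interp:.1f} ms")
    print(f"1st app frame reprojection error: {first_frame_error_ratio:.0f}x")
    print(f"2nd app frame reprojection window: {second_frame_window_ms:.1f} ms")
```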

So yeah, great, you are getting a higher frame rate. You can see your FPS counter show those crazy numbers (like 90), but your latency almost doubled. And this assumes you can hit 45 FPS before even enabling DLSS. If you can only hit 30 FPS, then take all those numbers and add 2 more frames of latency (e.g. from 62.2ms to 95.5ms) and 4 more frames to the reprojection error (88.8ms of prediction error to correct for). This means that every time the game renders an image, it does so nearly 1/10th of a second before the image is actually displayed, without any way to predict with certainty the actual headset position at the time of display.
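The same arithmetic can be generalized to any app frame rate that divides the refresh rate evenly. The formulas below are one reading of the diagrams, not anything from Nvidia: with `ratio` display frames per app frame, missed latching costs `ratio - 1` frames, and waiting for the next app frame before interpolating costs another `ratio`. That reading reproduces both the 45 FPS and 30 FPS figures above. The reprojection window for the 2nd app frame is passed in as a frame count (4 at 45 FPS, 8 at 30 FPS, as stated above) rather than derived. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LatencyEstimate:
    without_interp_ms: float  # app below refresh rate, no frame interpolation
    with_interp_ms: float     # backward interpolation: also wait for the next app frame
    reproj_window_ms: float   # error span late-stage reprojection covers for the 2nd app frame


def estimate(app_fps: float, reproj_frames: int,
             refresh_hz: float = 90.0, base_ms: float = 40.0) -> LatencyEstimate:
    frame_ms = 1000.0 / refresh_hz
    ratio = round(refresh_hz / app_fps)  # display frames per app frame (assumed whole, in phase)
    missed = ratio - 1                   # penalty from missed latching
    wait = ratio                         # delay until the next app frame is available
    without = base_ms + missed * frame_ms
    return LatencyEstimate(without, without + wait * frame_ms, reproj_frames * frame_ms)


if __name__ == "__main__":
    for fps, reproj_frames in ((45, 4), (30, 8)):
        e = estimate(fps, reproj_frames)
        print(f"{fps} FPS: {e.without_interp_ms:.1f} ms -> {e.with_interp_ms:.1f} ms, "
              f"reprojection window {e.reproj_window_ms:.1f} ms")
```

Formatted to one decimal, the 30 FPS case prints 95.6 ms and 88.9 ms; the figures in the text appear to be truncated rather than rounded.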