Documentation

This area is a bit lacking at the moment, as I’ve been focusing on developing the proof-of-concept code.

Here are some useful things:

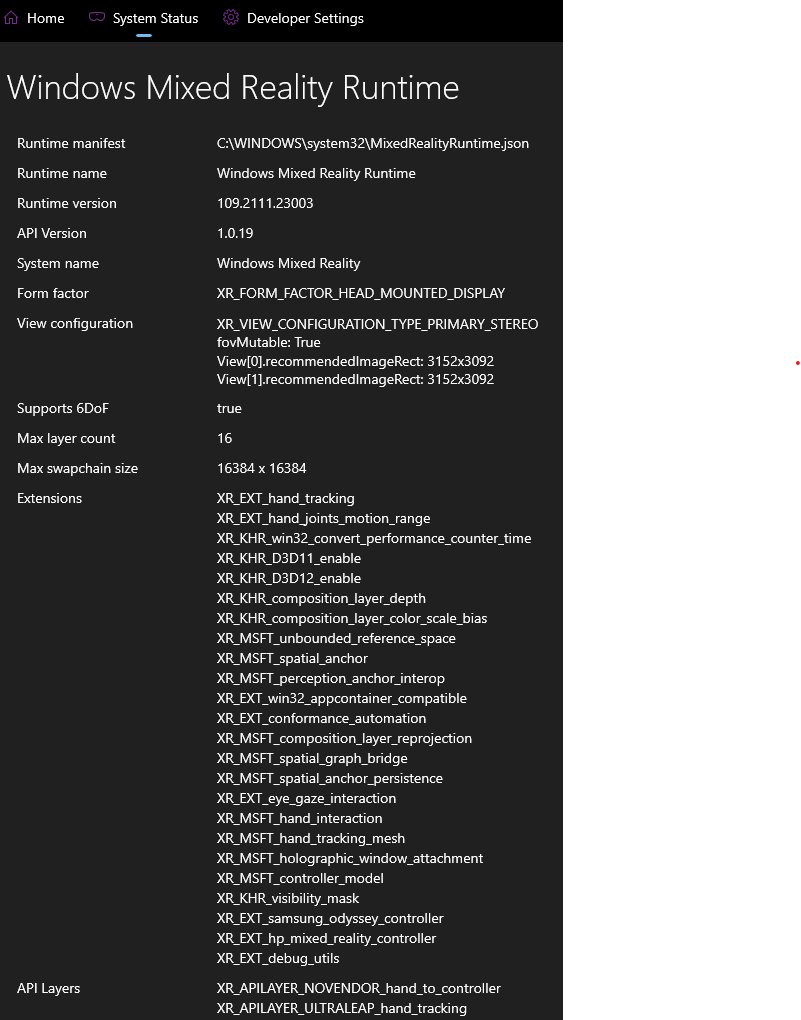

Grip and aim

Some applications use the grip pose and others use the aim pose. FS2020 uses the aim pose to compute the pointing “arrow” or “ray”. As far as I can tell, it does not use the grip pose.

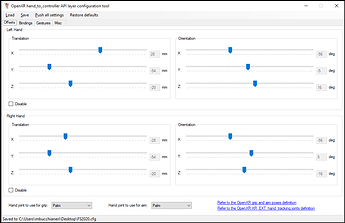

The offset can be modified with a translation and a rotation to adjust how the pose of the selected hand joint (for aim or grip) translates into the controller’s pose. You’ll have to experiment with this, but the default values I’ve set so far work pretty well with a “pinch action” model, where pinching is how you grab knobs, press buttons, etc.
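The snippet below is a minimal sketch of how a translation and rotation offset could be combined with a hand joint pose to produce a controller pose. It assumes the offset is expressed in the joint's local frame (so the offset translation is rotated by the joint orientation before being added), positions in metres, and OpenXR's (x, y, z, w) quaternion order. The `Quat`, `Pose`, `quat_from_axis_angle` and `apply_offset` names are hypothetical and are not taken from the layer's code.

```python
import math
from dataclasses import dataclass


@dataclass
class Quat:
    # Component order follows OpenXR's XrQuaternionf: x, y, z, w.
    x: float
    y: float
    z: float
    w: float

    def __mul__(self, o: "Quat") -> "Quat":
        # Hamilton product: the result applies rotation o first, then self.
        return Quat(
            self.w * o.x + self.x * o.w + self.y * o.z - self.z * o.y,
            self.w * o.y - self.x * o.z + self.y * o.w + self.z * o.x,
            self.w * o.z + self.x * o.y - self.y * o.x + self.z * o.w,
            self.w * o.w - self.x * o.x - self.y * o.y - self.z * o.z,
        )

    def rotate(self, v: tuple) -> tuple:
        # Rotate vector v by this unit quaternion: q * (v, 0) * conjugate(q).
        p = self * Quat(v[0], v[1], v[2], 0.0) * Quat(-self.x, -self.y, -self.z, self.w)
        return (p.x, p.y, p.z)


def quat_from_axis_angle(axis: tuple, degrees: float) -> Quat:
    # Hypothetical helper; axis must be a unit vector.
    half = math.radians(degrees) / 2
    s = math.sin(half)
    return Quat(axis[0] * s, axis[1] * s, axis[2] * s, math.cos(half))


@dataclass
class Pose:
    position: tuple  # metres
    orientation: Quat


def apply_offset(joint: Pose, offset: Pose) -> Pose:
    # Assumption: the offset lives in the joint's local frame.
    rotated = joint.orientation.rotate(offset.position)
    position = tuple(j + r for j, r in zip(joint.position, rotated))
    return Pose(position, joint.orientation * offset.orientation)


# Usage: a hand joint rotated 90 degrees around +Y, offset 5 cm along -Z.
joint = Pose((0.0, 1.0, 0.0), quat_from_axis_angle((0.0, 1.0, 0.0), 90))
offset = Pose((0.0, 0.0, -0.05), Quat(0.0, 0.0, 0.0, 1.0))
controller = apply_offset(joint, offset)
```

In this example the 5 cm offset along -Z (OpenXR's forward direction) ends up 5 cm along -X once the joint's 90° rotation is applied, which is why offsets are easier to tune in the joint's own frame than in world space.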

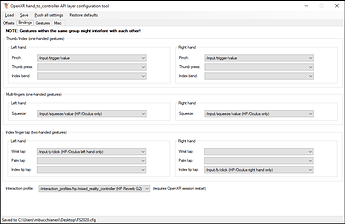

Gestures

- Pinch: bringing the tips of your thumb and index finger together.
- Thumb press: using the thumb to “press” onto the index finger. The target is the “intermediate” joint of the index finger (the 2nd inflection joint from the tip).
- Index bend: bending your index finger in a trigger-like motion.
- Squeeze: bending the middle, ring and little fingers in a trigger-like motion.
- Wrist tap: using the tip of the index finger of the opposite hand to press on the wrist.
- Palm tap: using the tip of the index finger of the opposite hand to press on the center of the palm.
- Index tip tap: bringing the tips of both index fingers together.
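Most of the gestures listed above boil down to the distance between two hand joints. The sketch below writes those joint pairs down as a table, using joint names from the OpenXR `XR_EXT_hand_tracking` `XrHandJointEXT` enum. It is an illustration based only on the descriptions above, not the layer's actual code: `GESTURE_JOINTS` and `gesture_distance` are hypothetical names, "this" means the hand the gesture is bound to and "other" the opposite hand (an assumption for the two-handed gestures), and Index bend and Squeeze are left out because the text does not say which joints measure the bend.

```python
import math

# Hypothetical table: gesture -> the two (hand, joint) pairs whose distance drives it.
GESTURE_JOINTS = {
    "pinch": (("this", "XR_HAND_JOINT_THUMB_TIP_EXT"), ("this", "XR_HAND_JOINT_INDEX_TIP_EXT")),
    "thumb_press": (("this", "XR_HAND_JOINT_THUMB_TIP_EXT"), ("this", "XR_HAND_JOINT_INDEX_INTERMEDIATE_EXT")),
    "wrist_tap": (("other", "XR_HAND_JOINT_INDEX_TIP_EXT"), ("this", "XR_HAND_JOINT_WRIST_EXT")),
    "palm_tap": (("other", "XR_HAND_JOINT_INDEX_TIP_EXT"), ("this", "XR_HAND_JOINT_PALM_EXT")),
    "index_tip_tap": (("this", "XR_HAND_JOINT_INDEX_TIP_EXT"), ("other", "XR_HAND_JOINT_INDEX_TIP_EXT")),
}


def gesture_distance(gesture: str, hands: dict) -> float:
    """hands maps "this"/"other" to {joint name: (x, y, z)} positions in metres."""
    (hand_a, joint_a), (hand_b, joint_b) = GESTURE_JOINTS[gesture]
    return math.dist(hands[hand_a][joint_a], hands[hand_b][joint_b])


# Usage: thumb and index tips 50 mm apart on the same hand.
hands = {
    "this": {
        "XR_HAND_JOINT_THUMB_TIP_EXT": (0.0, 0.0, 0.0),
        "XR_HAND_JOINT_INDEX_TIP_EXT": (0.03, 0.04, 0.0),
    },
    "other": {},
}
pinch_distance_m = gesture_distance("pinch", hands)
```

Written this way, it is easy to see why wrist tap and palm tap can interfere: both use the same fingertip and target two joints that sit close together on the same hand.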

As mentioned in the configuration tool, some gestures may interfere with others! For example, there isn’t much margin between a wrist tap and a palm tap. You can use the sensitivity adjustments, but ideally you should avoid binding both gestures to different actions.

Sensitivity

The gestures described above typically produce an “output value” between 0 and 1 based on the distance between the joints involved.

The “far distance” in the configuration is the distance at which the value maps to 0. Any distance larger than the far distance outputs 0.

The “near distance” in the configuration is the distance at which the value maps to 1. Any distance smaller than the near distance outputs 1.

So when you have a near distance of 10mm and a far distance of 60mm for pinching, this means that when the tips of your thumb and index finger are 60mm or more apart, the control value reads 0 (equivalent to the controller’s trigger being at rest, for example). When the tips of your thumb and index finger are 10mm or less apart, the control value reads 1 (equivalent to the controller’s trigger being fully pressed). When the tips of your thumb and index finger are 35mm apart, the control value reads 0.5 (because 35 is halfway between 10 and 60, equivalent to the trigger being pressed halfway).
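The mapping from distance to control value can be sketched as a clamped interpolation. This assumes the value changes linearly between the near and far distances, which is consistent with the 35mm → 0.5 example but is not spelled out in the text; `gesture_value` is a hypothetical name.

```python
def gesture_value(distance_mm: float, near_mm: float, far_mm: float) -> float:
    """Map a joint distance to a 0..1 control value.

    Assumption: the value falls off linearly between the near and far
    distances, which matches the 35 mm -> 0.5 pinch example.
    """
    if distance_mm >= far_mm:
        return 0.0  # at or beyond the far distance: at rest
    if distance_mm <= near_mm:
        return 1.0  # at or within the near distance: fully pressed
    return (far_mm - distance_mm) / (far_mm - near_mm)


# Pinch with near = 10 mm and far = 60 mm.
for d in (70, 60, 35, 10, 5):
    print(d, gesture_value(d, near_mm=10, far_mm=60))
# 70 0.0
# 60 0.0
# 35 0.5
# 10 1.0
# 5 1.0
```

Moving the near distance further out makes the gesture reach 1 with less effort; moving the far distance closer in makes it start responding later.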

Binding action

The action names are currently shown with their OpenXR standard path (e.g. /input/trigger/value), but they should still be easy to identify.

Paths ending with /value typically mean a continuous value between 0 and 1, while paths ending with /click mean a boolean action (pressed or not pressed).

The click threshold option lets you adjust the sensitivity of the /click actions.
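A minimal sketch of how a gesture's 0..1 value could feed the two kinds of bindings: /value paths receive the analog value directly, while /click paths become pressed once the value reaches the click threshold. The `action_state` helper is hypothetical, the example paths are only illustrative, and whether the threshold comparison is `>=` or `>` is an assumption.

```python
def action_state(path: str, value: float, click_threshold: float):
    """Hypothetical helper turning a gesture's 0..1 value into an action state.

    /value paths pass the analog value through; /click paths report pressed
    once the value reaches the click threshold (assumption: >= comparison).
    """
    if path.endswith("/click"):
        return value >= click_threshold
    if path.endswith("/value"):
        return value
    raise ValueError(f"unsupported action path: {path}")


print(action_state("/input/trigger/value", 0.8, click_threshold=0.75))  # 0.8
print(action_state("/input/select/click", 0.8, click_threshold=0.75))   # True
print(action_state("/input/select/click", 0.5, click_threshold=0.75))   # False
```

With this model, a lower threshold makes /click actions fire with a lighter gesture and a higher one requires a more complete gesture. Implementations often also use slightly different press and release thresholds (hysteresis) to avoid flicker around the threshold; the text does not say whether this one does.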

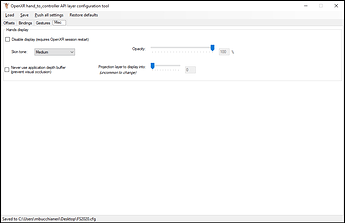

Hands display and skin tone

I’m currently rendering a very basic articulated hand skeleton in game.

Note that MSFS currently does not submit its depth buffer to OpenXR, which means that the hands remain visible even when they are behind another surface. This is beyond my control. Hopefully the game engine will submit depth in the future.

I am also proud to support diversity & inclusion by letting you choose anything from a lighter to a darker skin tone. I’m happy to add other tones too (maybe someone wants a blue Smurf tone).