Moderator Note: Some other topics regarding hand tracking were merged into this master. Continuity may be lost.

https://forums.flightsimulator.com/t/please-add-support-for-leap-motion-controller-free-hand-tracking-in-vr/359150

https://forums.flightsimulator.com/t/hand-tracking-support/387903

https://forums.flightsimulator.com/t/support-for-hand-tracking-with-leap-motion/498685

Now that MSFS has VR controller support, we need hand tracking!

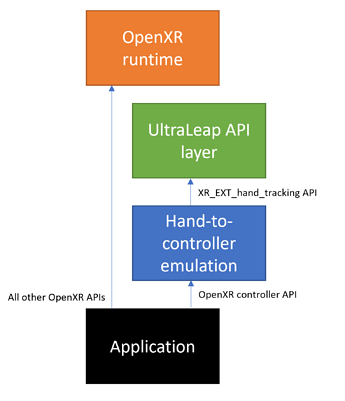

Update: There is a tool being developed for embedding Leap Motion hand tracking through VR controller emulation for OpenXR; please read the topic below. An alpha is available for testing and is showing promising results. There is hope we can get it working in that mode until Asobo can implement proper native hand tracking.

There is a large segment of the MSFS community that would love to get their hands on hand tracking (pun intended), now that Microsoft Flight Simulator has VR controller support. To a degree it’s already possible when using SteamVR with Leap Motion (UltraLeap) controller emulation. However, arguably the best mainstream VR HMD for flight simulation is the Reverb G2, and it only works reasonably well in MSFS when set to the WMR OpenXR runtime (the default). SteamVR OpenXR doesn’t work well at all. Leap Motion only works in SteamVR, and besides, controller emulation is not perfect. What’s needed is native hand tracking support that works in both WMR/OpenXR and SteamVR, i.e. we would be able to see our hands and fingers in VR and interact with all switches and knobs.

The tracking device is very affordable, under $100. And most of us use hardware yokes and throttles, so even though VR controllers are much better than a mouse, they are still not very convenient. Just using our hands would be so much better for real immersion and convenience.

Asobo, please develop hand tracking support with UltraLeap!

Here is a video of Leap Motion already working in MSFS. Imagine this with direct interaction, hands visible in MSFS:

(video reposted from here)

And here’s the new generation of UltraLeap tracking - imagine this working in MSFS - that would be a game changer!

Some people managed to get Leap Motion working in native mode in FSX! It should be no problem in MSFS; it just needs a bit of work from Asobo.