I’ve just been experimenting with the Power management mode setting in the Nvidia control panel.

Bear with me on this one. It’s a lengthy example, but I’d welcome any thoughts on it, and it may be of help to some of you.

Following the many suggestions I’ve seen in posts elsewhere on this forum, I’ve always had it set to Prefer maximum performance.

I keep a close eye on my CPU & GPU performance (using Open Hardware Monitor) after trying a bit of tweaking, after driver updates, and so on.

I usually have my frame rate limited to 33fps (more recently 40fps with the latest Nvidia driver) in the Nvidia control panel, and have Vsync set to Fast in there too. This usually sees me running at about 80-85% GPU core usage with temps of about 65-75C.

I know a lot of people advocate running the GPU at 100%, but I don’t agree with that. I prefer to have a little bit of spare capacity for when I go into more demanding areas, and it keeps my GPU about 7C cooler than it runs at 100%.

Today I was flying over the Swiss Alps in my Cessna Caravan and noticed my GPU was pegged at 95-99% core usage. I was up at 11,000 feet with live weather on and lots of clouds, so I thought it might be down to the amount of cloud being rendered. But when I finally flew into some clear areas with only a few clouds on the horizon, it was still showing 95-99%.

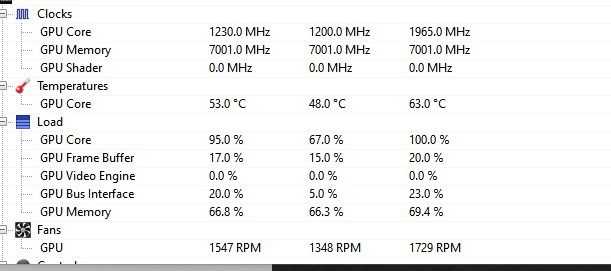

Taking a look at Open Hardware Monitor, I saw this:

For those of you not familiar with Open Hardware Monitor, the first column is the current value, the 2nd column the minimum, and the 3rd column the maximum value recorded.
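If you don’t have Open Hardware Monitor, here’s a rough sketch of how you could log the same three readings (core clock, core usage, temperature) with the same value/min/max layout. It assumes `nvidia-smi` from the Nvidia driver is on your PATH and only reads the first GPU; the function names are just mine for illustration, and this is not how Open Hardware Monitor itself works.

```python
import subprocess
import time

# nvidia-smi query fields: graphics (core) clock in MHz, GPU usage in %, temperature in C
QUERY_FIELDS = "clocks.gr,utilization.gpu,temperature.gpu"


def parse_sample(line):
    """Turn one CSV line such as '1230, 95, 53' into a dict of floats."""
    clock, usage, temp = (float(v.strip()) for v in line.split(","))
    return {"core_mhz": clock, "core_pct": usage, "temp_c": temp}


def read_sample():
    """Ask nvidia-smi for one reading (assumes it is installed and on the PATH)."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_sample(out.splitlines()[0])  # first GPU only


def update_min_max(stats, sample):
    """Track current / minimum / maximum per reading, like the three columns."""
    for name, value in sample.items():
        entry = stats.setdefault(name, {"value": value, "min": value, "max": value})
        entry["value"] = value
        entry["min"] = min(entry["min"], value)
        entry["max"] = max(entry["max"], value)
    return stats


def monitor(interval_s=2.0, samples=30):
    """Poll the GPU a fixed number of times and print value/min/max each time."""
    stats = {}
    for _ in range(samples):
        update_min_max(stats, read_sample())
        print(stats)
        time.sleep(interval_s)
```

Calling `monitor()` in a console while the sim is running would print a line every couple of seconds.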

Normally I would see the GPU core clock speed at 1980MHz all the time, with GPU core usage around 80-85% and temps about 70C in that type of scenery.

As you can see, it shows the core running at 1230MHz with 95% usage and the temps down at 53C.

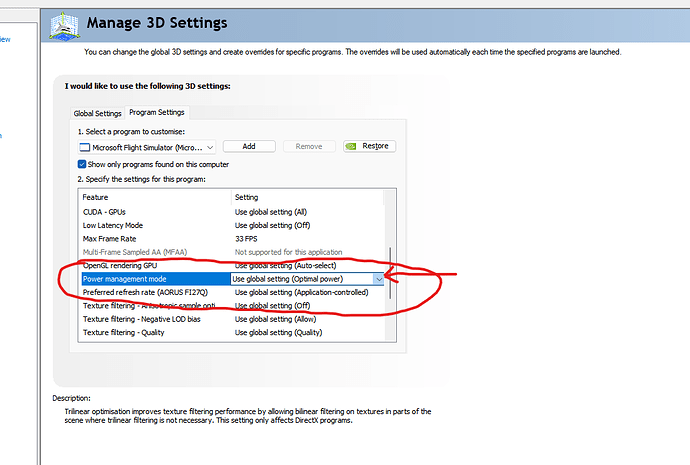

I then realised that after my recent driver update, the Nvidia control panel Power management mode was set to Optimal power rather than my usual Prefer maximum performance.

Now I hadn’t noticed any performance drop in MSFS with it set like this, it was still maintaining my locked 40fps (the screenshot was taken when I was using 33fps), so I continued for another 40 mins or so to my landing.

I kept a close watch on Open Hardware Monitor and realised it was just lowering or raising the core clock speed to keep the usage at about 99%. So as I came into my landing area, with more AI buildings, airport scenery and so on, it just bumped my core up to 1980MHz to maintain the 99% core usage.
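To put rough numbers on that behaviour, here’s a small sketch. It assumes (my simplification, not anything Nvidia documents) that the work per frame is fixed by the frame cap, so core usage scales inversely with core clock, and that the driver aims for about 99% usage. The 300MHz floor is a made-up placeholder; 1980MHz is the top clock I see on my card.

```python
def busy_mhz(core_clock_mhz, usage_pct):
    """Clock cycles actually doing work, in MHz (clock x usage)."""
    return core_clock_mhz * usage_pct / 100.0


def clock_for_target(busy, target_usage_pct=99.0,
                     min_clock_mhz=300.0,    # hypothetical idle floor
                     max_clock_mhz=1980.0):  # top clock observed in this post
    """Clock that would hold usage at the target, clamped to the card's range."""
    needed = busy / (target_usage_pct / 100.0)
    return max(min_clock_mhz, min(max_clock_mhz, needed))


# Reading from the screenshot: 1230MHz at 95% usage
load = busy_mhz(1230, 95)        # 1168.5 "busy" MHz
print(clock_for_target(load))    # about 1180MHz would hold that load at 99%

# A heavier scene that needs more than the card can give is clamped to 1980MHz
print(clock_for_target(3000))
```

Real cards won’t scale this neatly (memory clocks, boost steps and CPU limits all get in the way), so treat it as a back-of-envelope model only.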

As a result, I get lower GPU temps and less power usage for most of the flight, with no loss of performance fps-wise.

I also noticed I had no stuttering on landing/taxiing (I don’t get much anyway but this time it was zero).

So it would seem it’s better to leave it on Optimal power rather than Prefer maximum performance.

Any thoughts on any downsides to doing this?