Not that I know what I’m talking about but I don’t think so, no. It’s not interpolating anything to do with physics. It’s just inserting an estimated frame based on the ones before it. The physics will always reattach and bring it back at least 30/60 times a SECOND (or whatever) so it won’t break anything in the actual aircraft movement. All you might get is some visual artefacts where the predicted generated frame wasn’t quite a perfect fit but I think that will only happen with large “objects” moving fast and close to the camera. I think!

Input > Simulation > Rendering

There’s no loop back from what gets sent to the render engine which influences the sim.

DLSS 3 frame generation just looks at the last 2 rendered frames and generates an in-between frame using the motion vectors.

This adds latency since it’s holding the last frame rendered until it can generate that in-between frame and display it. The added latency is somewhat offset by being able to render more native fps and Nvidia’s Reflex will be enabled with DLSS 3 FG to keep latency low.

Basically it’s like playing the game on a TV with frame interpolation on, only slightly smarter. It’s just smoothing out what was already rendered not speeding up the simulation.
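To put rough numbers on that hold-back latency, here’s a back-of-envelope sketch in Python. It’s only a toy model: it assumes the newest rendered frame is delayed by half a rendered-frame interval (so the generated frame can be shown first) plus a fixed generation cost. The function name, `hold_fraction` and `generation_ms` are my own placeholders, not anything from Nvidia, and Reflex is ignored entirely.

```python
def added_latency_ms(rendered_fps: float,
                     hold_fraction: float = 0.5,
                     generation_ms: float = 0.0) -> float:
    """Rough extra display latency from holding back the newest rendered frame.

    hold_fraction and generation_ms are illustrative assumptions,
    not measured or documented values.
    """
    frame_interval_ms = 1000.0 / rendered_fps
    return hold_fraction * frame_interval_ms + generation_ms


for fps in (30, 60, 120):
    print(f"{fps} rendered fps -> ~{added_latency_ms(fps):.1f} ms extra")
```

On this model the cost doubles each time the rendered fps halves, so the hit matters most when the base frame rate is already low.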

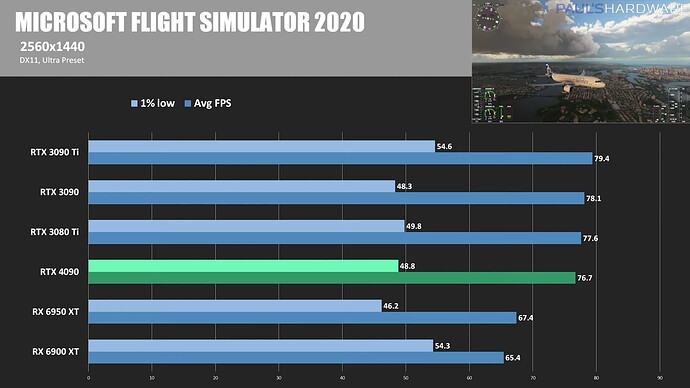

For 4K in 2D it’s a big improvement, even without DLSS 3. At 1440p, not so much, as it’s CPU bound. Still, very promising for VR users. This was with a Ryzen 7950X. Mind you, I’m currently getting similar 1440p results with my 5800X3D and a comparatively lowly 6800XT using DX12 with the same plane and ultra preset.

Guess with a 1080p screen and without VR, I won’t have to worry about saving money in the near future

I would be more interested in how the 4090 works together with a 5800X3D. I would venture a guess that in MSFS the older 5800X3D will still beat the new 7950X.

Is there an option to keep the original resolution, or even a higher render scale like with TAA, and then only use frame interpolation to lower latency and boost fps? Instead of lowering the render resolution and scaling up like DLSS 2.0?

I don’t know why, but DLSS quality in MSFS is far inferior to other games like Control, Cyberpunk 2077, RDR2… You can’t see much difference in quality in those games with the naked eye when playing. But in MSFS the graphics are just blurry and low quality, even though motion in the sim is much, much slower than in action games.

What’s the difference between 60 frames and 200 frames in a flight sim? If the quality cannot be improved and only the frame rate can, then the 4090 is just overcapacity!

If the CPU produces 60 updates per second and the GPU has the capability to send to the monitor 120 frames per second, the GPU will only process and send 60 frames per second. Is this an issue? The bottleneck is the CPU because the GPU can process everything the CPU can send to it.

If the CPU can produce 120 updates per second and the GPU can send to the monitor only 60 frames per second, the GPU will only process and send 60 frames per second. Is this an issue? The bottleneck is the GPU.

The issue is that MSFS is very dynamic with a multitude of features.

If the various features in MSFS such as Multiplayer, AI traffic, etc. change the CPU production to rapidly changing 20 to 60 updates per second and the GPU has the capability to send to the monitor 120 frames per second, the GPU will only process and send 20 to 60 frames per second. Is this an issue? It is because every stutter or pause by the CPU will be seen by the user on the monitor.

If the various features in MSFS such as Multiplayer, AI traffic, etc. change the CPU production to 70 to 80 updates per second and the GPU can only send to the monitor 60 frames per second, the GPU will only process and send a maximum 60 frames per second. Is this an issue? Not really because any CPU stutter or pause will not be displayed on the screen as long as the CPU rate doesn’t fall below 60.

These scenarios are simplified but realistic. One way to stop the user seeing stutters and pauses is to cap the GPU at an artificially low FPS, deliberately creating a GPU bottleneck. With a 30 FPS cap, for example, the user will see a mostly smooth 30 FPS as long as the CPU can produce updates faster than the GPU sends frames to the monitor.

If a user installs a 4090 GPU that can provide 70, 80, 90 or more FPS, then the CPU needs to be able to provide 70, 80, 90 or more updates per second to keep up.
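The scenarios above boil down to "the slower side wins". Here’s a minimal Python sketch of that idea, assuming the displayed frame rate is simply the minimum of what the CPU produces and what the GPU can (or is capped to) deliver. The function names and the sample CPU numbers are made up for illustration; a real sim has far messier frame pacing.

```python
def displayed_fps(cpu_updates_per_s, gpu_cap_fps):
    """Per-sample displayed frame rate: the slower of CPU and GPU wins."""
    return [min(cpu, gpu_cap_fps) for cpu in cpu_updates_per_s]


def swing(fps_series):
    """Difference between best and worst sample; 0 means perfectly steady."""
    return max(fps_series) - min(fps_series)


# Sample CPU rates are invented, loosely matching the scenarios in the post.
scenarios = {
    "CPU 20-60, GPU 120": ([20, 45, 60, 30], 120),
    "CPU 70-80, GPU 60": ([70, 80, 75, 72], 60),
    "CPU 70-80, GPU capped at 30": ([70, 80, 75, 72], 30),
}

for name, (cpu, gpu) in scenarios.items():
    shown = displayed_fps(cpu, gpu)
    print(f"{name}: shown={shown}, swing={swing(shown)} fps")
```

When the GPU is the limit, the CPU’s ups and downs are hidden and the swing is zero. When the CPU is the limit, every dip goes straight to the screen.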

These kinds of benchmark videos are fake. Some of them are uploaded so early that they’d essentially be breaking the press embargo (which Nvidia takes very seriously). You see these fake benchmark videos all the time whenever you search for benchmarks, and they all have the same thumbnail style.

Those numbers are most certainly made up.

Impressive, 77 FPS in 4k with ultra settings.

Now no one can grumble anymore “but the Fenix has 3FPS less than the PMDG, I hereby command that Fenix makes 256x256 pixel textures for the interior, and removes the 3D cabin and the cockpit seats, and half of the flight physics, and the animated windshield wipers shalt be removed for FPS optimizing”

I’m gonna go all out for this GPU. Gonna build it with all new components to get the most out of it. It comes at a price, I know, but I really don’t know which 3rd-party RTX 4090 to get. The Aorus looks amazing, but that size… Or the MSI 4090 looks slick, but which one can give you some extra performance?

If I understood Digital Foundry’s assessment of DLSS 3 correctly, it should be a good improvement when you already have a fairly high frame rate, like 50-60 fps without DLSS. There is a latency hit from DLSS 3, and a higher base frame rate makes it less noticeable. But with a really low frame rate like 20-30 fps and microstutters (which plague MSFS depending on aircraft, airport and graphics settings), the latency may get really bad even if your fps goes to, say, 50. So it might be good if the user already has a reasonably good frame rate but wants more for whatever reason (VR, head tracking and/or bragging rights), but I am not sure how much it will help users who want to go from the 30 fps to the 60 fps region. It may be really good for fast-paced titles like CP2077 to reach 144-165 fps on fast monitors. To get the lower frame rate region sorted, users will probably still need to get better CPUs first.

I am also not sure DLSS 3 will help with stutters at all. It needs two frames to generate the frame in between, and during a stutter the wait for that second frame gets longer too, so I doubt DLSS 3 will be able to smooth that out. But if it can make it less noticeable, that’s a win.

The 4090 may be really good if MSFS implements true ray tracing effects for reflections / shadows / global illumination / ambient occlusion etc., but by the time those are implemented we may have the RTX 50 series ready to go. For me at least, the 4090 doesn’t offer much other than the 24 GB of VRAM; VRAM is the Achilles heel of my RTX 3080 10GB. For that reason, I may get it if it gets any cheaper later next year. But then the RTX 4080 or a later 4080 Ti model may make better economic sense.
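On the stutter point, here is a toy Python sketch of why an in-between frame can shrink a stutter but not remove it. It assumes exactly one generated frame is shown at the midpoint between each pair of rendered frames and ignores the hold-back delay; the timestamps are invented, and `present_times` / `max_gap` are just names I picked.

```python
def present_times(render_times_ms):
    """Insert one generated frame at the midpoint of each rendered pair."""
    shown = []
    for prev, cur in zip(render_times_ms, render_times_ms[1:]):
        shown.append(prev)
        shown.append((prev + cur) / 2)  # the generated in-between frame
    shown.append(render_times_ms[-1])
    return shown


def max_gap(times_ms):
    """Longest wait between two consecutive displayed frames."""
    return max(b - a for a, b in zip(times_ms, times_ms[1:]))


# Invented example: ~30 fps rendering with one 134 ms hitch in the middle.
rendered = [0, 33, 66, 200, 233]

print("worst gap without frame generation:", max_gap(rendered), "ms")
print("worst gap with frame generation:   ", max_gap(present_times(rendered)), "ms")
```

In this made-up case the hitch drops from 134 ms to 67 ms, but the normal gap between displayed frames is now about 16.5 ms, so the hitch is still roughly four times longer than everything around it.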

Get the Founders Edition if you can. You will probably get the best cost-to-performance ratio, and in my opinion it looks better than all the 3rd-party models. And there’s no EVGA this time around. Overclocked cards really won’t give you much more performance, and as per initial reviews, the Founders Edition cooler is pretty good at keeping things cool (well, if you can call it cool).

Yes, according to Gamers Nexus the FE has a very good quality cooling solution and is one of the smallest cards (so it fits more cases). However, AFAIK it’s a Best Buy exclusive and I expect them to sell out quick.

If you’re outside the US then I expect it to be near impossible to get the FE. Nvidia is probably sending most 4090 inventory to the US because all the other currencies got crushed vs. the USD, and demand is expected to be low due to much higher GBP/EUR/AUD/JPY prices, Europe especially so this winter.

If the FE is not available then my recommendation is to get the cheapest base US$1600 non-OC model, like the Zotac, Gigabyte Windforce or the Asus TUF. MSFS will remain heavily CPU-limited so GPU workload is likely to be somewhat low (compared to psycho ray tracing on Cyberpunk 2077) and it makes buying a more expensive OC model moot. Save the money for Zen 4 X3D, expected to come out at CES early next year.

I have a 3090 FE so can attest to its build quality. It’s a tank. I would get the FE over any other part, certainly after reading some of the horror stories regarding some vendors cheaping out on the caps.

I hadn’t dug into this aspect, but I’ve read some talk about the ATX3 specs. As long as you have a relatively recent PSU with enough capacity, an ATX3 PSU is not a necessity. Apparently a 12-pin to 3x 8-pin cable is included as well.

Well, Nvidia has stipulated that would-be RTX 4090 owners should have a power supply of at least 850W. For the RTX 4080 16GB the requirement is 750W, and it’s slightly less for the RTX 4080 12GB variant at 700W.

I had a quick look but didn’t find anything on the Nvidia website to back this up, though I have read the same statement on a couple of different websites now. So there may not be any need to rush out and get an ATX3 PSU straight away, but the writing’s clearly on the wall: those with older, lower-rated PSUs will need to get a new one eventually.

I have an EVGA 1600W PSU, so I expect I will be okay.

I’m running 2xPSU. A Corsair RM850x for the 3090 and peripherals and a RM750x for the 12900KF and motherboard. The 850 has only 3x8-pin connectors, whilst the 750 has 4x. So I’ll probably use the 750 for the 4090 and peripherals, should be enough.

Most certainly I agree, but do 3 fans cool better? I had my eyes on the Aorus Master RTX 4090 card but it’s hard to choose now after reading some posts here.

Yeah, I have a 3080 and would have gotten the Founders Edition if I could. I ended up having to get an OC board that uses a lot more power but doesn’t perform much better.

It was frustrating that they basically stopped making the Founders Edition fairly quickly. I hope they don’t intend to do that with the 4090, as I want to wait and see what AMD does, etc. I also want to see more DLSS 3 comparisons with something like the PMDG. I’ve seen lots of external views of Cessnas etc. cruising around, and rendering external scenery is probably what DLSS 3 will do best, since that’s basically how most games work. My question is what the glass cockpits look like, and in practice what you can do with the scenery etc. options on the better GPU without killing the CPU running the actual simulation.

This does look like a genuine upgrade over the 3000 series GPUs. I don’t have a huge issue with it being priced accordingly, and since I bought the 3080 inflation alone would be expected to add a few hundred. However, I’m not in a mad rush to replace a 2 year old GPU that is still very good for something that is still relatively unknown. Plus I’d probably want to bump up my PSU.

What shall that be? If you build the wrong system you’ll always end up with bad speeds… I have the RX 6900 XT and I run the sim at a stable 60 FPS (VSync lock) on Ultra in 4K. It would go faster, but with a lot of tearing. You just need the right CPU and matching RAM etc. 40 FPS is utter BS. And if it’s too slow, reduce the rendering resolution a tiny bit; with a good screen that’s not even noticeable. That 4090 is as necessary as a piano in the ocean. Waste of money and resources.

A nice video, and nice to see the performance uplift… But I have an issue with this test. I can load up the default A320 over NYC on Ultra settings at 4K, fly it in chase view and get up to 60 fps (not constant), and I can easily maintain 40+ FPS with a 3090.

What I want to see is the 4090 at max settings, with the demo being a landing at iniBuilds KLAX or EGLL, with lots of FSLTL traffic, in the Fenix A320 cockpit view, with lots of clouds, because that to me is real-world performance! It’s all very well testing with a default plane, a bit of weather and hardly any AI, from chase view, where the glass cockpit isn’t stressing the CPU.

That’s what I need to see anyway to convince me.