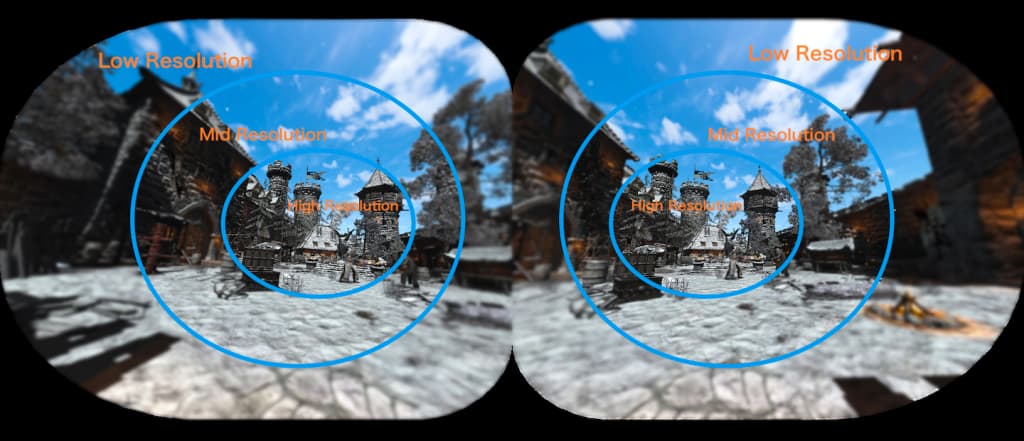

I’ll clarify a bit what FFR is. This picture explains it well:

Source: uploadvr.com

Basically, we reduce the rendering resolution in the parts of the image that are farther from the center (where your eyes are usually looking). This works because the human eye naturally perceives less “clarity” in the periphery, and also because the lens distortion in your headset is sort of “wasting pixels” near the edges of the image anyway.

Ideally, you’d use eye tracking to move the highest-resolution region so it follows your gaze (because the periphery moves along with your eyes). But few commercial VR headsets have eye tracking, and it turns out the “fixed” center solution works pretty well.

This is implemented with a feature of recent GPUs that lets you specify the “shading rate”, meaning how many pixels are actually shaded (have their color computed individually) in specific regions of the image. This is called Variable Rate Shading (VRS). The parameters you can tweak define how big each region (ellipse) is and which shading rate each region uses. Regions are specified in tiles of 16x16 pixels, and the rate can go from full resolution (shade all 256 pixels in the tile individually) to the lowest resolution (shade one pixel and reuse it for a whole 4x4 group, so only 16 pixels are shaded for the entire 16x16 tile, i.e. 1/16th of full resolution).
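To make the tile bookkeeping a bit more concrete, here is a minimal Python sketch that assigns a shading rate to each 16x16 tile by testing the tile’s center against nested ellipses, then counts how much shading work that saves. It is not a real VRS API (D3D12 and Vulkan each expose their own shading-rate images and rate enums); the names `Region`, `shading_rate_map`, `shaded_per_tile` and `work_fraction`, the ellipse radii, the chosen rates and the 1440x1600 resolution are all hypothetical, picked only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

TILE = 16  # tile edge in pixels, as described above


@dataclass(frozen=True)
class Region:
    # Hypothetical parameterization: ellipse size as a fraction of the
    # image's half-width / half-height, plus the coarse-pixel size used inside it.
    radius: float
    rate: tuple[int, int]  # (x, y): (2, 2) = one shaded pixel per 2x2 block


# Hypothetical settings, innermost region first.
REGIONS = (Region(0.4, (1, 1)), Region(0.7, (2, 2)))
OUTER_RATE = (4, 4)  # used for every tile outside all ellipses


def shading_rate_map(width, height, center=None, regions=REGIONS, outer=OUTER_RATE):
    """Return rows of per-tile (x, y) shading rates.

    `center` defaults to the image center (fixed foveation); passing a
    gaze point instead models eye-tracked foveation.
    """
    cx, cy = center if center is not None else (width / 2, height / 2)
    ax, ay = width / 2, height / 2  # ellipses scale with the image size
    tiles_x = (width + TILE - 1) // TILE  # ceil division: partial edge tiles count
    tiles_y = (height + TILE - 1) // TILE
    rows = []
    for ty in range(tiles_y):
        row = []
        for tx in range(tiles_x):
            # Classify each tile by its center point.
            px = tx * TILE + TILE / 2
            py = ty * TILE + TILE / 2
            rate = outer
            for region in regions:
                # Inside the ellipse if (dx/rx)^2 + (dy/ry)^2 <= 1.
                dx = (px - cx) / (region.radius * ax)
                dy = (py - cy) / (region.radius * ay)
                if dx * dx + dy * dy <= 1.0:
                    rate = region.rate
                    break  # innermost matching region wins
            row.append(rate)
        rows.append(row)
    return rows


def shaded_per_tile(rate):
    """Pixels actually shaded in one full 16x16 tile at the given rate."""
    rx, ry = rate
    return (TILE // rx) * (TILE // ry)


def work_fraction(rate_map):
    """Shading work relative to full resolution (1.0 = no savings).

    Treats every tile as a full 16x16 tile, which is exact only when the
    image size is a multiple of 16.
    """
    shaded = sum(shaded_per_tile(r) for row in rate_map for r in row)
    full = TILE * TILE * sum(len(row) for row in rate_map)
    return shaded / full


if __name__ == "__main__":
    print({r: shaded_per_tile(r) for r in [(1, 1), (2, 2), (4, 4)]})
    # prints {(1, 1): 256, (2, 2): 64, (4, 4): 16}
    rmap = shading_rate_map(1440, 1600)  # hypothetical per-eye resolution
    print(f"shading work vs. full resolution: {work_fraction(rmap):.0%}")
```

In this model, moving `center` from the image center to a gaze point is the only difference between fixed and eye-tracked foveation; the tile classification and the savings math stay the same.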

Hope that helps.