good call on the memory. swapped my ram out for new sticks and i can run everything again without any crashing. can OC my CPU as well and dx11 and dx12 both seem stable now - thanks a bunch for that

i have a 4090 and can render clouds on ultra easily along with super sampling resolution to 150%. this still leaves me with GPU headroom as the sim is quite CPU bound due to LOD and terrain cache

This is actually a great eli5 explanation. Thanks for that.

I feel your pain…

I’m glad you solved the problem. It just sounded like a RAM issue to me…

I can too. The problem is not FPS, but that it somehow increases stutters by a significant margin. I’ll check again with a 7950X3D when I get it. It looks like a CPU-related issue to me, not a GPU one. But who knows…

Just today I tested the “Turbo mode” option with my Oculus Quest 2 and RTX 3070 Ti.

I was in flight when I selected it, over Rome with photogrammetry at 400 ft in the Bell 407 helicopter (the worst-case scenario in VR). Usually I had a fairly smooth experience, but not a perfect one.

In this scenario I was getting small micro stutters when I turned my head quickly from right to left, or when I flew the helicopter in a circle.

After selecting Turbo mode, everything changed…

No more micro stutters, everything is super smooth. I even cranked many in-game settings up from medium to high and still get a fluid experience. If I turn my head quickly, nothing happens.

Now I feel like I have a 4090 Ti instead of a 3070 Ti.

How is this possible???

Magic!!!

Don’t believe in magic!

But I am so surprised.

If you are already CPU bound (which you most likely are; even I am with a 13900K), clouds on Ultra make things worse. AFAIK they increase CPU load, not just GPU load.

Exactly. That’s what I was commenting on. A 4090 leaves almost everyone CPU-bound almost all the time. And there’s a misconception that Terrain LOD is the only CPU-intensive setting and that the rest mostly affect the GPU. But Ultra clouds definitely affect the CPU enough to introduce stutters. That’s why a 4090 doesn’t help here.

Yes, one thing to keep in mind… clouds aren’t just heavy -static- volumetric renders in the scene. The clouds are part of the weather system, driven by a CPU physics thread. The clouds change in volume, and they move. This is most likely tied to CPU workload. And of course, the more you add, the higher the workload (e.g. Ultra vs Medium). It’s just another dynamic component/variable in the simulator that can suddenly have an impact on your performance when you were otherwise running just peachy. It’s something to keep in mind when you are tweaking your settings.
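To put rough numbers on the CPU-bound point, here is a toy model in Python. It assumes the frame time is simply whichever of the CPU and GPU takes longer per frame, which is a simplification and not how the sim actually schedules its work. The function name and every millisecond value are made up for illustration, not measurements.

```python
def frame_stats(cpu_ms: float, gpu_ms: float) -> dict:
    """Toy model: the slower of CPU and GPU sets the frame time (an assumption)."""
    frame_ms = max(cpu_ms, gpu_ms)
    return {
        "fps": 1000.0 / frame_ms,
        "gpu_busy": gpu_ms / frame_ms,  # fraction of the frame the GPU is working
        "bound": "CPU" if cpu_ms >= gpu_ms else "GPU",
    }


# Hypothetical per-frame costs in milliseconds, for illustration only.
medium_clouds = frame_stats(cpu_ms=20.0, gpu_ms=18.0)
ultra_clouds = frame_stats(cpu_ms=26.0, gpu_ms=22.0)
# Same Ultra CPU cost, but with a GPU assumed to be twice as fast.
ultra_faster_gpu = frame_stats(cpu_ms=26.0, gpu_ms=11.0)

for name, stats in [
    ("medium clouds", medium_clouds),
    ("ultra clouds", ultra_clouds),
    ("ultra clouds, faster GPU", ultra_faster_gpu),
]:
    print(f"{name}: {stats['fps']:.1f} fps, "
          f"GPU busy {stats['gpu_busy']:.0%}, {stats['bound']}-bound")
```

In this model the faster GPU only lowers GPU utilization. The frame rate stays where the CPU puts it, which fits the reports above of spare GPU headroom alongside stutters.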

With OXRTK I get odd flashes in the left or right eye. They look like a single blank white frame being sent to one eye. This only happens if I use sharpening and/or color correction; generally I would use CAS and sometimes color. This does not happen if I only use FFR.

Running an Index HMD on SteamVR’s OpenXR runtime with a 9900K, 32 GB, 4090 system.

Edit: Just remembered this was with DX12. Not sure about DX11, as I haven’t used that for a while.

Which CPU have you got?

The other day I tested with clouds on Ultra and got micro stutters, unlike on medium-high. The GPU was only at around 90%, with room to spare. I also watched the 12900K: about half of the P-core threads were under relatively high load, as always, though nothing was pinned at 100% usage.

Deleted.

Taken to direct message, which is probably more appropriate…

So which CPU on the market today would handle this (in stock condition, no overclocking, etc.)?

Sorry if this is off-topic!

When dealing with wireless streaming, the bitrate will be a limiting factor for visual quality. The link speed between your computer and your headset isn’t fast enough to send the full resolution of your headset, so it has to use lossy compression to get around it.

I haven’t used VD, but the settings you mention are likely all to do with what bitrate is being used.
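A back-of-the-envelope sketch of why compression is unavoidable, written in Python. The per-eye resolution, refresh rate, and 150 Mbps stream bitrate below are illustrative assumptions, not values from any particular headset or from VD's settings.

```python
def raw_video_bitrate_mbps(width_per_eye: int, height_per_eye: int,
                           refresh_hz: int, bits_per_pixel: int = 24,
                           eyes: int = 2) -> float:
    """Uncompressed video bitrate in megabits per second."""
    bits_per_second = (width_per_eye * height_per_eye * eyes
                       * bits_per_pixel * refresh_hz)
    return bits_per_second / 1e6


# Assumed example values (hypothetical headset and streaming settings).
raw_mbps = raw_video_bitrate_mbps(1832, 1920, 90)
stream_mbps = 150.0
compression_ratio = raw_mbps / stream_mbps

print(f"raw: {raw_mbps:.0f} Mbps, stream: {stream_mbps:.0f} Mbps, "
      f"compression needed: about {compression_ratio:.0f}x")
```

With these assumed numbers the encoder has to throw away roughly two orders of magnitude of data, so raising the bitrate setting is the most direct way to get detail back, as far as the link allows.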

Any hope or news on DLSS 3 in VR?

None, no. All the threads I started died off. Nobody seems to care, and I don’t have enough bandwidth to drive this alone.

IMO you are better off with motion reprojection anyway.

What a pity. Maybe something will change in the future and VR will be able to take advantage of the dedicated DLSS 3 hardware, either through a special DLSS for VR or to handle motion reprojection better. Thanks a lot for your efforts.

Though I do have a question: how is it possible that my ancient TV, almost 15 years old, with a ridiculously underpowered CPU compared with today’s hardware (and even with hardware from 15 years ago), could manage its own “DLSS 3” and “MR” so well? On Samsung, I think I remember the option to smooth the image is called motion judder canceller.

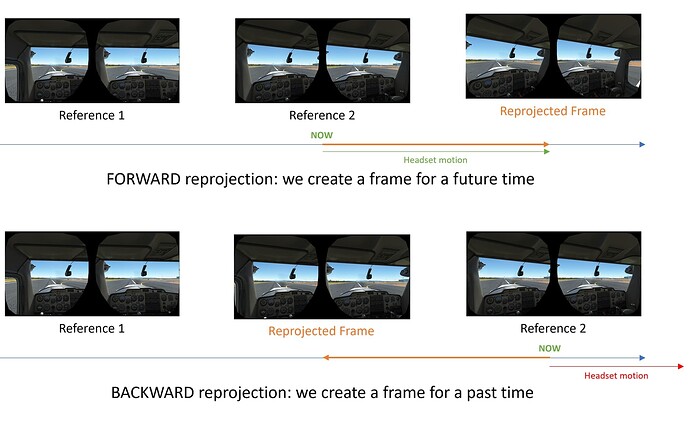

Don’t quite get the relevance of TV smoothing here. TV content is fixed camera. You are always moving the camera in VR. This is what makes MR difficult. This is what makes DLSSG not usable as-is.

DLSSG is backward interpolation. This technique is highly incompatible with VR due to latency.

(from my other post about reprojection: Motion Reprojection explained - General Discussion & Interests / Virtual Reality (VR) - Microsoft Flight Simulator Forums)

(and here a reference to the latency of DLSSG: Will DLSS 3.0 be supported in VR msfs? - General Discussion & Interests / Virtual Reality (VR) - Microsoft Flight Simulator Forums)
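To illustrate the latency point about backward interpolation, here is a simplified timing sketch in Python. It assumes an in-between frame can only be built once the next real frame has finished rendering, that output frames are paced evenly, and that the interpolation itself costs nothing. The function names are hypothetical and this is not a model of how DLSSG is actually implemented.

```python
def added_latency_ms(rendered_fps: float) -> float:
    """Minimum extra delay for real frames under the assumptions above."""
    frame_interval_ms = 1000.0 / rendered_fps
    # The in-between frame is shown first, so the real frame waits half an interval.
    return frame_interval_ms / 2.0


def display_timeline(rendered_fps: float, n_frames: int) -> list:
    """Return (label, display_time_ms) pairs for real and interpolated frames."""
    interval = 1000.0 / rendered_fps
    timeline = []
    for k in range(1, n_frames + 1):
        finished = k * interval  # real frame k finishes rendering here
        timeline.append((f"interp {k - 1}-{k}", finished))
        timeline.append((f"real {k}", finished + interval / 2.0))
    return timeline


for label, shown_at in display_timeline(rendered_fps=45.0, n_frames=3):
    print(f"{shown_at:7.2f} ms  {label}")

print(f"extra delay at 45 fps rendered: {added_latency_ms(45.0):.1f} ms")
```

Under these assumptions every real frame reaches the display at least half a render interval late. A TV can absorb that kind of delay, but in VR it stacks on top of head-tracking latency.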