Well, what I see in your results is that you are able to get “zero stutters”, and the only thing really different about your setup is the 5800X3D CPU. I fly with TLOD 100 and still have stutters over Manhattan. I do see a very significant improvement over the 3080, but of course the gap is bigger than it would be coming from a 3090 Ti. Most importantly, even 60fps is not enough for me without MR (motion reprojection), as I can’t stand the flicker. And with MR there’s a huge hill that the 4090 got me over: a stable 30fps with MR at native G2 resolution. It is a big deal. It was never possible for me to fly at native resolution with any settings on the 3080, period. I had to use FSR at 80% and it was still very challenging. I do complain about stuttering now, but it’s still leaps and bounds better than before, when there were stutters and low FPS, with MR failing and resulting in a jerky, warping mess. So the 4090 is definitely worth it for me, but it’s very clear from both my results and yours that a CPU upgrade is in order. For me the 5800X3D would be a downgrade in productivity, so the only option is to wait for the 7800X3D to be released, hopefully in a reasonable timeframe…

What’s the issue with the 4090 and Aero?

Since CPU performance is so critical with the 4090 (and even for us poor 3080 Ti folks), could we all state the boost or overclock speed we use, for example 5800X3D @ 5.2GHz?

I hope Mr. Fantastic mbucchia is considering changing or adding to the OXRTK HUD the full CPU timing, instead of the current appCPU figure, which only has to do with rendering and doesn’t include the sim’s compute time (that shows up as main thread time). As always, it seems to be a critical balance.

Ryzen 9 5900X overclocked through Curve Optimizer, boosting to 4.85GHz single-core and 4.4GHz all-core in Cinebench R23. It doesn’t want to boost higher than that, despite a 360 liquid-cooled AIO that keeps it from ever exceeding 72°C. I also tried overclocking with Hydra, with the same result. I think 4.85GHz is actually good for this CPU.

Really bad constant flickering. Visible in all applications, not just MSFS.

Lots of chatter on the Varjo Discord about it. Varjo engineers think they might have spotted the issue: “a change in flip behaviour with the new generation of GPUs”, which I believe refers to flip queues and frame synching. They’re working on a fix now, so fingers crossed.

That said, being forced back onto the G2 has reminded me of all the things I prefer about it: much lighter and more comfortable, no need for ear buds, plug and play (no mucking around trying to convince base stations to see the Aero), and I didn’t even find myself thinking the image quality was much of a downgrade. Don’t get me wrong, the Aero is better, but definitely not 5 times better. I had got used to not having to mop condensation off the lenses, though; that I do not miss about the G2!

Oh, that does sound annoying. Hope they fix it soon! Yeah, the G2 is still great.

My 5800X3D is stock.

In another thread, Matthieu mentioned that additional CPU frame time measures are going to be included in the next release of OXRTK.

With SU10 reinstalled, I whacked every setting to the max apart from TLOD and OLOD, which I left at 200/150, and re-ran test 1. Performance was pretty similar: 44FPS on the runway and 30-41FPS over downtown. Dropping to TLOD 100 didn’t yield any significant FPS increase this time, so with everything maxed out I’m probably now reaching the GPU limit. Unfortunately, some stutters are now back.

Well, trying to squeeze every bit out of my 5900X until the 7900X3D is available, I revisited my overclocking and was able to make it boost to 4.625GHz all-core and 5.0GHz (!) under single-core load, via Auto OC and some undervolting. However, the actual Cinebench R23 scores are lower than with my manual overclock using Curve Optimizer. So, not sure what’s going on…

I was looking at the Aero too, and my conclusion was the same: I’m sticking with the G2 for now.

I settled on the best multicore and single-core scores versus the CPU boost ratio: under multicore loads my 5900X now boosts to 4.625GHz, and under single-core loads up to 4.9GHz. Manual Curve Optimizer, Auto OC with power limits set to “Motherboard”.

Try switching between DLSS and TAA, and then end with DLSS in Performance mode. I have a bug there that limits my FPS.

Whoa, does it really block an M.2 slot? Is it blocking the first slot or second?

Keep in mind the 1st M.2 slot (M2_1, the 0th) is usually (always?) the faster one, as it communicates directly with the CPU, whereas the others go through the chipset. Is that still a thing on the X670 chipset?

I believe this shows up in raw SSD benchmarks (I haven’t tested in ages), but it shouldn’t have any real-world impact. Maybe with DirectStorage in future titles, but probably not there either.

It doesn’t block the on-board 2nd M.2 slot, but I have 3 SSDs, so I had a PCIe expansion board with an M.2 slot. As the 4090 is a true 4-slot monster, it blocked the second full-size PCIe slot. Fortunately my older SSD is an M.2 SATA drive, not an M.2 NVMe drive, so I didn’t lose any speed by connecting it through a SATA M.2 adapter. I ran benchmarks and I’m good. So I freed up the second M.2 slot and moved the MSFS drive into it.

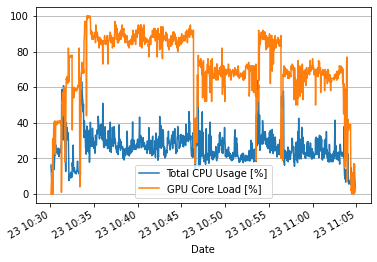

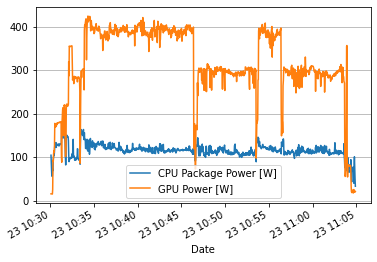

Those GPU load readings make no sense to me. I do a similar test over and down in Manhattan (a discovery flight, for defined conditions) and get, like you, up to 49fps at 2700 ft and the low 30s fps when flying rowdily between the buildings. But my GPU was loaded at around 65-70% with these settings. So are you sure you’re tracking the right value?

My CPU is an 11900K, able to unleash 3/4 of the 4090’s power.

Besides this, I hope you have time to play between all your upgrades!

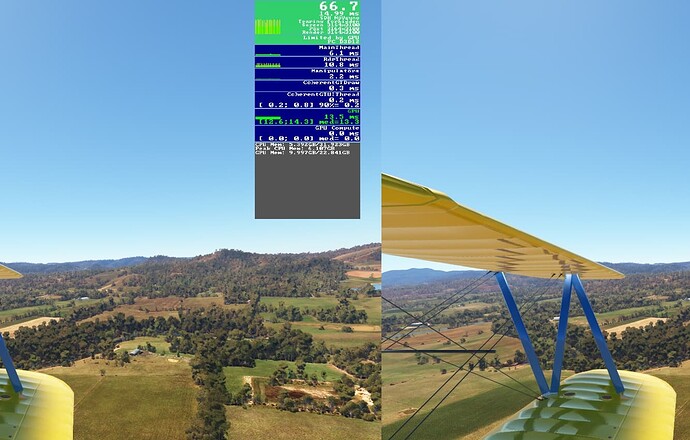

Same CPU as yours, very similar observations. The following is from a Top Rudder 103SOLO flying in between buildings in Manhattan in the morning under the Few Clouds weather setting. All graphics settings maxed except TAA 100, TLOD 100, OLOD 100 and Cockpit Refresh Rate Low. With MR off I get 90% GPU utilization and 45FPS; with MR on it’s 70% GPU and 30FPS.

If someone is CPU bound with a Reverb G2, they are probably doing something wrong and losing out in visuals.

Is it all-core usage? If so, that’s meaningless. Most likely, if you look at the most loaded core, it’s going to be close to 100% loaded. What are you measuring it with?
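To illustrate why an all-core figure can hide a CPU bottleneck, here is a small Python sketch using made-up per-core loads (hypothetical numbers, not measurements from this thread): the average looks modest, while the single busiest core, which is what a main-thread-limited sim would be waiting on, is nearly pegged.

```python
# Hypothetical per-core utilisation (%) for a 12-thread CPU; illustrative only.
per_core_load = [98, 45, 40, 35, 30, 25, 20, 20, 15, 10, 10, 8]


def summarize_load(loads):
    """Return (average load across all cores, load of the busiest core)."""
    average = sum(loads) / len(loads)
    busiest = max(loads)
    return average, busiest


avg, peak = summarize_load(per_core_load)
print(f"all-core average: {avg:.1f}%  busiest core: {peak}%")
```

With these numbers the all-core average comes out under 30% even though one core sits at 98%, which is why the per-core view (or frame timings) tells you more than a single utilisation figure.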

I’m fairly sure that I’m CPU bound with the 4090 and G2; it’s pretty clear from the timings in OXR devtool. Still, I’m not sure I should max out all settings too, because at times it feels like I get FPS dips from that, even with the 4090.

No, the Windows perf mon is a bit confusing. I was looking at the 3D load, but it would appear that the ‘graphics_1’ measure was running a lot higher. At the end of the day, frame times are the best indicator of whether you’re CPU or GPU bound. If I max out all of the settings, I can still get the GPU to bottleneck in some scenarios. I don’t really want to fiddle with settings mid-flight to balance GPU and CPU depending on surroundings, so I’m just trying to find the sweet spot that works for every plane, everywhere.
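As a rough sketch of the frame-time reasoning above, the Python below classifies a frame as CPU or GPU bound by comparing per-frame CPU and GPU times. It assumes a simplified model where whichever side takes longer sets the frame rate (so FPS is about 1000 / max of the two times); `classify_bottleneck` and the sample numbers are hypothetical, not from any tool mentioned in the thread.

```python
def classify_bottleneck(cpu_ms, gpu_ms):
    """Rough classification from per-frame CPU and GPU times in milliseconds.

    Simplifying assumption: the slower side sets the frame rate, so the
    achievable FPS is roughly 1000 / max(cpu_ms, gpu_ms).
    """
    limiting_ms = max(cpu_ms, gpu_ms)
    fps = 1000.0 / limiting_ms
    if cpu_ms > gpu_ms:
        side = "CPU"
    elif gpu_ms > cpu_ms:
        side = "GPU"
    else:
        side = "balanced"
    return side, fps


# Hypothetical frame times, not measurements from this thread.
side, fps = classify_bottleneck(cpu_ms=30.0, gpu_ms=22.0)
print(f"{side} bound, ~{fps:.1f} FPS")  # CPU bound, ~33.3 FPS
```

In practice the two times move with scenery and settings, which is why a setup can flip between CPU and GPU bound over the same flight.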

I don’t trust those timing statistics. The OXR devtool was telling me I was CPU bound when I had the 3080. My eyes don’t lie. For me the 4090 was an even better purchase than the 3080.

My Asus TUF OC RTX 4090 finally got delivered, even though I ordered it five minutes after they became available to purchase. This time I forgot to ask for Express Post, duh.

Anyhoo, it performs very well indeed. I haven’t been able to do much testing in VR yet, as I’m still waiting on a version 2 cable for my G2 to arrive. I had the G2 plugged into the USB-C connector on the Radeon 6800XT, which gave no problems on my AMD X570 motherboard. Sadly, without that I can only get one USB port to power up the headset, and even then there’s no sound and it keeps going black to display my non-existent volume setting. It may work with the USB hub of my 43" 4K monitor when that arrives tomorrow. Anyway, the version 2 cable should work fine.

The performance in VR, what little I tried, was nearly twice what I was getting with the same settings on the 6800XT: high 60s to mid 70s fps in the Stearman in a rural setting. Even without MR, which I didn’t try, it was so much smoother and really sharp that I may not bother with it. More info when I get the headset to work properly.

OXR100%, TAA100%, TLOD 200, OLOD 100

DX12, plus I forgot to disable HAGS, as I used it for testing DLSS3 in 2D

Mix of high and medium settings. No DLSS or Toolkit.

Ryzen 5800X3D, which gave very low Main Thread timings.

Asus TUF OC 4090 (which is huge, looks cool, and all fits in the case; I could easily close the glass panel).

Lousy photo, but at least the adaptor cable fitted without needing to squish the cables up too much.