Here is an early video of the melted buildings phenomenon: https://youtu.be/FTAMQUNypuo?t=279 (@4:40).

Overall the building quality in that video is pretty good. It seems to me that this issue always existed, but has gotten much worse for some reason.

I never personally noticed it before the Japan upgrade. It’s possible it was there, only less prevalent.

What I noticed in the Japan upgrade is that these melted buildings were appearing in areas where I was flying regularly and hadn’t seen it before. Initially, I could see it in distant buildings. As I got within a few miles, they would fix themselves. With each subsequent update, that distance got shorter and shorter. As of 2 updates ago, I was able to fly over areas for several minutes at 1000 ft and slowly see stuff pop up from the melted wrecks. As of last update, it’s hit or miss if they’ll ever fix themselves. And even if they do, there always seems to be a bunch of melted buildings and trees left behind.

Not just possible, it’s certain it was there. Look at the video I posted, which is from September 4.

The first time I personally noticed it, it was all over the place on Daytona Beach, Florida. I haven’t seen early videos that show it all over the place, only in isolated areas like in the video above.

Cool. Not that I was doubting you. You have video evidence from Sept 4 and that’s good enough for me. I didn’t check because video streaming is blocked on this network (I’m at work on lunch break). Either I wasn’t affected by it at the time or wasn’t noticing it due to the WOW factor of the graphics at close range. I first noticed it after the Japan update. I remember it was quite jarring when I first noticed it. And it’s gotten worse since.

Gotta love that new car smell, until it wears off. I think there is a lot of that going on. There are numerous posts from people noticing a degradation in the graphics, and the evidence just doesn’t support that.

Our perception of the graphics has changed, and as we grow more familiar with them, we will see the imperfections even more. It happens all the time with everything that’s new that later becomes familiar.

I have no issue with the quality. What bugs me is how short the draw distance is before photogrammetry loads. Autogen areas load out way beyond photogrammetry ones. I get that it’s for performance, but it would be nice to have the option to load photogrammetry much further out for those who can handle it now, or will be able to in the future, because right now it looks pretty bad a very short distance out.

Yeah, I still haven’t figured out LOD for autogen vs. photogrammetry. It’s not the same. I bet that photogrammetry is actually controlled primarily by the terrain slider, and acts more like terrain because it is a mesh. Photogrammetry acts almost like DEM in the way it renders more detail as you get closer, whereas autogen simply pops in at its LOD radius and really doesn’t change much, except in applying the textures to the objects as you approach.
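To make that guess concrete, here’s a toy model of the two behaviours. It’s a sketch under assumptions: the radius and distance bands are invented numbers, not the sim’s real LOD values, and whether photogrammetry really follows the terrain slider is exactly the open question.

```python
from typing import Optional

# Hypothetical numbers for illustration only; NOT the sim's real LOD values.
AUTOGEN_RADIUS_M = 8000.0

# Hypothetical photogrammetry bands: (max distance in metres, mesh detail level).
# Higher level = denser mesh, refining like terrain/DEM as you approach.
PHOTOGRAMMETRY_BANDS = [(500.0, 3), (2000.0, 2), (6000.0, 1), (15000.0, 0)]


def autogen_visible(distance_m: float) -> bool:
    """Autogen acts like a switch: inside the radius it's drawn, outside it isn't."""
    return distance_m <= AUTOGEN_RADIUS_M


def photogrammetry_detail(distance_m: float) -> Optional[int]:
    """Photogrammetry acts like terrain: mesh detail steps up as you get closer."""
    for max_dist, level in PHOTOGRAMMETRY_BANDS:
        if distance_m <= max_dist:
            return level
    return None  # past the last band: no photogrammetry mesh loaded at all


if __name__ == "__main__":
    for d in (300, 1500, 5000, 10000, 20000):
        print(f"{d:>6} m  autogen={autogen_visible(d)}  "
              f"photogrammetry={photogrammetry_detail(d)}")
```

In this model, raising object LOD would only move `AUTOGEN_RADIUS_M`, while raising terrain LOD would stretch the photogrammetry bands, which is the difference a slider test should be able to reveal.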

I’m going to try keeping my object LOD at 100 and then up the terrain LOD and see if that makes a difference in photogrammetry.

You’re misunderstanding me. I’m suggesting that the developers employ a similar image processing “AI” technique like they’re currently using to identify tree and building locations in non-photogrammetric areas, and they apply that similar technique to the photogrammetry data itself.

So trees for example: The “AI” would be used to find vegetation in the continuous mass of photogrammetry geometry. It wouldn’t rely on existing data. If a collection of vertices (or the image texture mapped to those vertices) in the 3D photogrammetry mesh meets certain thresholds for “greenness”, “blobbiness”, and “height above local ground” then it could identify a 3D region as a “tree location”.
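Here’s a rough sketch of how those three thresholds could be coded, purely to illustrate the idea. Assumptions: each photogrammetry vertex carries a colour sampled from its texture; “greenness” is measured with the Excess Green index (2g - r - b on normalised colour), a common vegetation index I’m substituting in; “local ground” is the lowest vertex in a 3x3 neighbourhood of 5 m grid cells; and “blobbiness” is just a minimum number of vertices in a connected group of flagged cells. `Vertex`, `find_tree_candidates` and every threshold are hypothetical; nothing here is how Asobo or Blackshark actually process the data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Vertex:
    x: float
    y: float
    z: float  # height in metres
    r: float  # texture colour sampled at this vertex, 0..1
    g: float
    b: float


def excess_green(v: Vertex) -> float:
    """Excess Green index (2g - r - b) on chromaticity-normalised colour."""
    total = v.r + v.g + v.b
    if total == 0:
        return 0.0
    return (2 * v.g - v.r - v.b) / total


def find_tree_candidates(verts, cell=5.0, min_green=0.1, min_height=2.0, min_cluster=3):
    """Return clusters (sorted lists of vertex indices) that look like trees."""
    def key(v):
        return (int(v.x // cell), int(v.y // cell))

    # 1. Lowest vertex per grid cell ...
    lowest = {}
    for v in verts:
        k = key(v)
        lowest[k] = min(lowest.get(k, v.z), v.z)

    def ground_at(k):
        # ... taken over the 3x3 neighbourhood, so a tree filling one cell
        # doesn't become its own "ground".
        return min(lowest[(k[0] + dx, k[1] + dy)]
                   for dx in (-1, 0, 1) for dy in (-1, 0, 1)
                   if (k[0] + dx, k[1] + dy) in lowest)

    # 2. "Greenness" and "height above local ground" thresholds.
    flagged = defaultdict(list)  # grid cell -> flagged vertex indices
    for i, v in enumerate(verts):
        k = key(v)
        if excess_green(v) >= min_green and v.z - ground_at(k) >= min_height:
            flagged[k].append(i)

    # 3. "Blobbiness": group flagged cells into 8-connected clusters.
    clusters, seen = [], set()
    for start in flagged:
        if start in seen:
            continue
        seen.add(start)
        stack, members = [start], []
        while stack:
            cx, cy = stack.pop()
            members.extend(flagged[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    n = (cx + dx, cy + dy)
                    if n in flagged and n not in seen:
                        seen.add(n)
                        stack.append(n)
        if len(members) >= min_cluster:
            clusters.append(sorted(members))
    return clusters


if __name__ == "__main__":
    # Grey ground grid, a green blob standing 6-8 m tall, a flat green lawn
    # vertex, and a tall grey building vertex.
    verts = [Vertex(x, y, 0.0, 0.5, 0.5, 0.5) for x in range(0, 50, 5) for y in range(0, 50, 5)]
    verts += [Vertex(21, 21, 6.0, 0.2, 0.6, 0.2), Vertex(22, 23, 7.0, 0.2, 0.6, 0.2),
              Vertex(23, 22, 8.0, 0.1, 0.5, 0.1)]
    verts += [Vertex(40, 40, 0.0, 0.2, 0.6, 0.2), Vertex(10, 10, 15.0, 0.5, 0.5, 0.5)]
    print(find_tree_candidates(verts))
```

Only the green blob is picked up: the lawn is green but flat, and the building is tall but not green, which is the point of combining the thresholds.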

Sure you can. Once a tree is identified in the photogrammetry geometry using any number of techniques (like the above color, shape, etc.), you could do any number of things with the identified vertices in the photogrammetry mesh. You could flatten them relative to a local ground plane. You could cull them entirely and patch the hole. Then you stick an autogen tree over the same location.
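A minimal sketch of the flattening option plus the autogen placement, assuming the tree’s vertex indices and some nearby ground vertices are already known (e.g. from a classification pass). Fitting a least-squares plane through the ground points is my choice of “local ground plane”; `fit_ground_plane` and `flatten_tree` are hypothetical names.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_ground_plane(points):
    """Least-squares plane z = a*x + b*y + c through (x, y, z) ground points.

    Needs at least three points that are not all on one line.
    """
    n = len(points)
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    # Normal equations for (a, b, c), solved with Cramer's rule.
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = _det3(m)
    coeffs = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        coeffs.append(_det3(mc) / d)
    return tuple(coeffs)


def flatten_tree(vertices, tree_idx, ground_idx):
    """Drop tree vertices onto the local ground plane; return (new_vertices, anchor)."""
    a, b, c = fit_ground_plane([vertices[i] for i in ground_idx])
    out = list(vertices)
    for i in tree_idx:
        x, y, _ = vertices[i]
        out[i] = (x, y, a * x + b * y + c)
    # The autogen tree goes at the footprint centroid, sitting on the plane.
    cx = sum(vertices[i][0] for i in tree_idx) / len(tree_idx)
    cy = sum(vertices[i][1] for i in tree_idx) / len(tree_idx)
    return out, (cx, cy, a * cx + b * cy + c)


if __name__ == "__main__":
    verts = [(0, 0, 5), (10, 0, 6), (0, 10, 7), (10, 10, 8),  # sloping ground
             (5, 5, 15), (6, 5, 14)]                          # melted tree
    flat, anchor = flatten_tree(verts, tree_idx=[4, 5], ground_idx=[0, 1, 2, 3])
    print(flat[4:], anchor)
```

Using a fitted plane rather than a single height keeps the flattened patch following a slope, so the replacement tree doesn’t end up floating or buried on a hillside.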

Nope, it would be pre-processed server side. The identified vegetation locations could then be handled locally, the GPU culling the identified photogrammetry verts and inserting a locally stored autogen model, but the client side CPU wouldn’t be used to find photogrammetry trees, or even have to process the photogrammetry geometry really other than loading it.

So here’s how it might work: Blackshark, Asobo, or whoever runs a pass on the Bing photogrammetry data in a region and identifies the locations of trees. These locations are sent to the client simulator, whether they’re streamed or downloaded as base scenery in the next update isn’t important here. Then the client side sim can use these locations to modify the streamed photogrammetry mesh as it gets it. There are different ways the tree locations could be formatted: indexed verts in the photogrammetry mesh, or an exclusion box that is culling the photogrammetry geometry. But, whichever format, there are then methods like using geometry shaders on the GPU to make modifying this mesh fast and efficient. You’re either tweaking the heights of verts or not drawing them, and then drawing an autogen tree. The performance hit should be negligible compared to the rest of the autogen.
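To show that the client-side half really is cheap, here’s a CPU-side Python stand-in for what would run on the GPU, covering both formats mentioned: indexed verts and an exclusion box. `ExclusionBox`, the mask helpers, and planting the autogen tree at the centre of the box floor are all hypothetical choices for illustration; on real hardware the per-vertex test would live in a shader, not a Python loop.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ExclusionBox:
    """Hypothetical record the server could send: an axis-aligned box around one tree."""
    lo: Vec3
    hi: Vec3

    def contains(self, p: Vec3) -> bool:
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))

    def autogen_anchor(self) -> Vec3:
        """Where to plant the replacement autogen tree: centre of the box floor."""
        return ((self.lo[0] + self.hi[0]) / 2, (self.lo[1] + self.hi[1]) / 2, self.lo[2])


def keep_mask_from_boxes(vertices: Sequence[Vec3], boxes: Sequence[ExclusionBox]) -> List[bool]:
    """Box format: a cheap per-vertex inside/outside test against each box."""
    return [not any(b.contains(v) for b in boxes) for v in vertices]


def keep_mask_from_indices(vertex_count: int, culled: Sequence[int]) -> List[bool]:
    """Indexed format: the server lists the photogrammetry verts to drop."""
    drop = set(culled)
    return [i not in drop for i in range(vertex_count)]


def cull_triangles(triangles, keep):
    """Skip any triangle touching a culled vertex (this leaves the hole to patch)."""
    return [t for t in triangles if all(keep[i] for i in t)]


if __name__ == "__main__":
    verts = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (5, 5, 8), (10, 10, 0)]
    tris = [(0, 1, 2), (1, 2, 3), (1, 4, 2)]
    tree = ExclusionBox((4, 4, 2), (6, 6, 12))
    keep = keep_mask_from_boxes(verts, [tree])
    print(cull_triangles(tris, keep), tree.autogen_anchor())
```

Either format ends up as the same per-vertex keep mask, so the choice between them is mostly about download size: a box is six numbers per tree, while an index list grows with the number of verts in each photogrammetry tree.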

Here’s a photogrammetry tree:

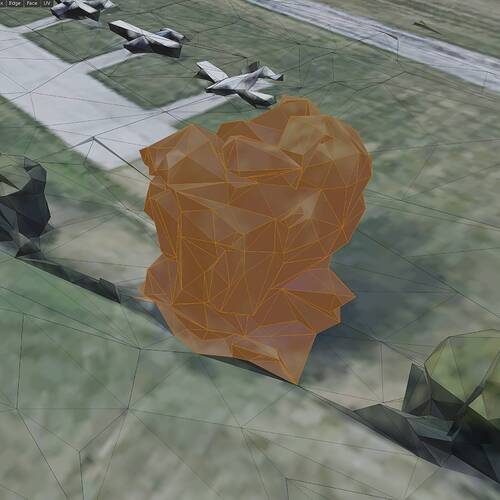

Because the vertices in the mesh are green, and contiguous in a blob above the other nearby vertices identified as “ground”, the “AI” precomputed this as a tree, and sent this bounding volume to the client Flight Simulator:

The GPU on the client’s machine now has a nice way of identifying the verts in that tree:

The GPU can then just remove the photogrammetry tree by culling these verts at render time:

The GPU could then patch that hole, and insert an autogen tree that looks better in its place, or do whatever other fix.

It’s not that Asobo can’t improve the photogrammetry. I’m making suggestions using extensions of capabilities they’ve already demonstrated. You could apply similar techniques to replace, fix, or add detail to other types of photogrammetry objects: boats, houses, radio towers…

Or be a data supplier for a competitor simulator. My point here wasn’t that Flight Simulator isn’t good and needs to be replaced, or that Google is necessarily the one to do it. But that competition is a good thing and will benefit us.

We do have an incredible sim, and I enjoy the parts that work well. It’s also an early-access/alpha state sim that was rushed to market in a half-baked state. Major features that were advertised are not implemented. Other major features are works in progress with a long way to go. And yet other parts of this incredible sim are frankly quite shoddy. We could be fanboys and defend this game from any criticism, or we could have constructive conversations on how it could be improved. I’m happy with what I have here, but I’m not going to be happy if this is all we have. This game needs a lot of work to bring it to the state that Microsoft and Asobo had pitched it as. It’s clear they’re going to spend the next several years working toward those goals. My hope is that we’re helping make contributions to the design process with these discussions. Not simply knocking the game.

My photogrammetry is awful! It looks partially loaded and melted. It also doesn’t load things further away. My friend is playing on a lower-end PC than me; he plays at 1080p and I play at 4K. His loads correctly.

My internet is faster, at 80 Mbps. The only things I’m wondering are:

- Is an upload speed of around 5 Mbps too slow?

- Can my GTX 1060 and i5 3470 not render the scenery at 4K fast enough?

A GTX 1060 at 4K is asking for the impossible. Even an RTX 2070 would struggle in some circumstances.

It does work with render scaling at 60.

You would probably be better off at 1080P and 100%.

I’ve tried that and it’s unplayable.

If you run 4K with render scale of 50, it renders at 1080p and upscales. 60 is definitely acceptable too. That’s actually the correct way of doing it if you’re using a 4K monitor.
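The arithmetic behind that, as a quick sketch. It assumes render scaling applies to each axis (which is what makes 50% of 4K come out at exactly 1080p); `internal_resolution` is a hypothetical helper, not a sim setting or API.

```python
def internal_resolution(native_w: int, native_h: int, render_scale_pct: int):
    """Resolution the sim renders at before upscaling to the monitor.

    Assumes the render scale applies to each axis, so 50% of 4K is 1080p.
    """
    return native_w * render_scale_pct // 100, native_h * render_scale_pct // 100


if __name__ == "__main__":
    for pct in (100, 60, 50):
        w, h = internal_resolution(3840, 2160, pct)
        print(f"{pct:>3}% -> {w} x {h} = {w * h:,} pixels")
```

At 50% the rendered pixel count matches native 1080p exactly, and 60% (2304 x 1296) is about 44% more pixels than 1080p. Render scale mainly shifts GPU load; it does little for a CPU bottleneck.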

The problem with doing that is that every pixel rendered in game becomes 4 pixels on the screen on a 4K panel. That causes jaggies and makes text very difficult to read. It’s much better to do the other way around as described above.

It’s always best to run a monitor at native resolution for the best image quality. In games that don’t offer render scaling, running below native causes issues for the aforementioned reasons, especially if you’re trying to run at a resolution that doesn’t scale up to the native one by a whole-number factor (like running 2560 x 1440 on a 4K screen).
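The condition that actually matters is whether the monitor’s resolution is a whole-number multiple of the rendered one on both axes; then every rendered pixel covers an exact block of screen pixels. A quick check using Python’s standard `fractions` module (the helper names are mine):

```python
from fractions import Fraction


def scale_factors(src, dst):
    """Per-axis scale from a rendered resolution (src) to the panel's (dst)."""
    return Fraction(dst[0], src[0]), Fraction(dst[1], src[1])


def maps_cleanly(src, dst):
    """True when each rendered pixel lands on a whole block of screen pixels."""
    return all(f.denominator == 1 for f in scale_factors(src, dst))


if __name__ == "__main__":
    uhd = (3840, 2160)
    for src in [(1920, 1080), (1280, 720), (2560, 1440)]:
        sx, sy = scale_factors(src, uhd)
        print(src, f"x{sx} by x{sy}", "clean" if maps_cleanly(src, uhd) else "uneven")
```

So 720p would also divide evenly into a 4K panel (3x), while 1440p lands on 1.5 screen pixels per rendered pixel, which the scaler has to smear across neighbouring pixels.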

I am surprised. The pixel density on a 4K screen of the same size as a 1080p one, say 24 inches, is much higher, so I would have thought the end result would be pretty much the same in terms of image quality. One lives and learns.

4K is 2x horizontal, and 2x vertical resolution of 1080p. To run 1920 x 1080 on said monitor, each 1080p pixel becomes 4 pixels on the 4K screen. It’s simple math, really. Pixel density is higher, but on the render side (in game) it’s not anti-aliasing the same way to get rid of those jaggies.
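To picture the “each 1080p pixel becomes 4 pixels” point: with plain nearest-neighbour scaling, doubling each axis turns every rendered pixel into a 2x2 block, so jagged edges keep their stair-steps, just bigger. This is a simplified model (`upscale_nearest` is a hypothetical helper); real monitor and GPU scalers often filter, e.g. bilinearly, which trades the blocks for blur rather than removing the jaggies.

```python
def upscale_nearest(image, factor):
    """Nearest-neighbour integer upscale: every pixel becomes a factor x factor block."""
    return [
        [pixel for pixel in row for _ in range(factor)]  # repeat each pixel across
        for row in image
        for _ in range(factor)                           # repeat each row down
    ]


if __name__ == "__main__":
    tiny_1080p = [["A", "B"],
                  ["C", "D"]]
    for row in upscale_nearest(tiny_1080p, 2):
        print(" ".join(row))
    # Whole-frame ratio: four 4K pixels for every 1080p pixel.
    print((3840 * 2160) // (1920 * 1080))
```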

When using 4K native and a 50% render scale, the upscaling is done in software, including the anti-aliasing, and the output still maps 1:1 onto the screen’s pixels. That makes everything sharper, even though it’s rendering at 1080p.

In terms of performance though, it shouldn’t make a difference (theoretically speaking) whether you run 4K with 50% scaling or 1080p natively. I can’t say for sure as I haven’t tested it, but in theory it shouldn’t, as you’re rendering at 1080p in either case. However, in terms of visual quality, it will make a difference.

I was aware of the importance of driving a monitor at its native resolution but in his case he’s got a 4K monitor being driven by a card that is at best suited for 1080P on complex games like FS. Sounds like he really should get a better card when budget will allow for it.

Indeed. Not to mention his CPU is under the minimum requirement. i5 3470 is 7 generations old. It’s most definitely a CPU thing IMHO. The GTX 1660 should be able to run 4K at 50% scale render with a mix of medium and high settings with a fast enough CPU.

My son runs a GTX 1070, which is roughly the same GPU power (give or take) as the 1660. He has a mix of high / medium settings and it runs great (30+ fps most times, 20+ in busy areas), and photogrammetry works as well as can be expected currently (the same as it does on higher-end cards, which is still kind of poor at times). The difference is he’s got a Ryzen 3800X feeding it.

I suspect severe CPU bottlenecking with an i5 3470.

It’s a GTX 1060, I think he said, not a 1660.