I love the new 2024 sim. Yes, it has bugs; all games do. We live in a world of “constant improvement”.

From what I’ve read, DX12 is supposed to let the CPU and GPU access VRAM more directly and handle resources more efficiently to improve performance.

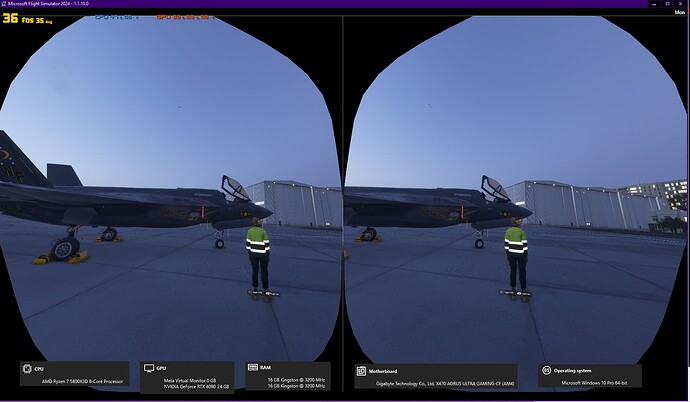

I run a 5800X3D and a 4090 in VR with a Quest 3.

In VR you’d expect your GPU to run at 97% and use as much VRAM as possible, and in 2020 that was the case.

In 2024 I’m seeing my 4090 barely breaking a sweat. Ultra or not, it rarely seems to use its full potential. Likewise, the CPU, despite the new multithreaded nature of the 2024 architecture, doesn’t seem to max out all its cores, and it still gets hung up on the main thread in NYC, etc.

Even after striking a balance between GPU-limited and CPU-limited scenarios and settings, I seem to see optimization issues with DX12 in 2024. My understanding, though very limited, is that DX12 leaves the optimization to the devs. In the past, driver-level optimizations took care of much of this, so devs didn’t really have to worry about it. DX12 has shifted that load onto the game developer.

I’d like people who know this stuff to chime in on DX12 optimization. Is Asobo understaffed and dropping the ball on DX12 optimization, leaving performance on the table with this new architecture?

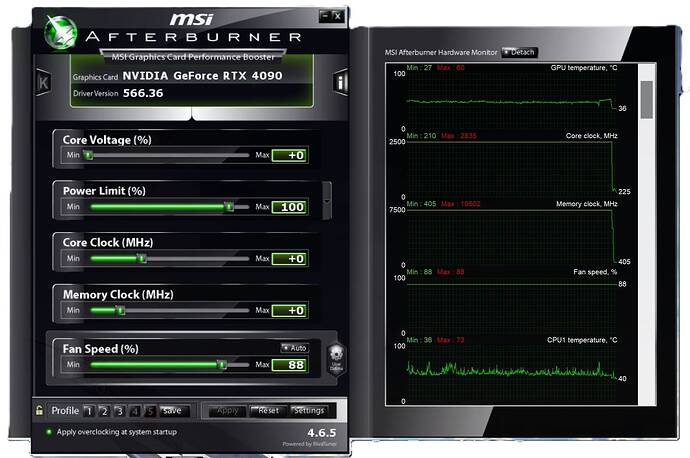

I also use DX12 and have an Intel 10980XE CPU (4.7 GHz overclock) and a liquid-cooled MSI Suprim RTX 4090 graphics card.

I could not even start the sim until I found a workaround, but since then I have been using the simulator and trying many different configurations with my CPU. My graphics card constantly runs at full speed, regardless of whether I set it to Normal or Performance in the Nvidia app. It runs very cool but, as I said, stays at full clocks the whole time the sim is running.

My CPU has 18 cores and 36 threads, and I initially thought the sim wouldn’t start because of the high core count. Now that I can start the sim with no problem, I see that it is using all the cores and threads with hyperthreading enabled.

I am also getting great gameplay with no stutters and amazing graphics while using HDR on a 43-inch Asus ROG Swift monitor.

I’ll try and get a screenshot showing the full clock speed on my GPU.

Same CPU, same GPU, same VR device. GPU utilization is around 90% in VR here, about 85-89% when parked on the ground, as indicated by FPSMonitor. Wrong sensor?

I also have the Quest 3 and have only tried it once, but I am looking forward to some crisp and clear VR. I am just trying to find my best settings right now until the patch arrives.

Here is a screenshot of my basic CPU/GPU temps and usage. My RTX 4090 is at full clocks all the time in game, with the preference set to Normal in the Nvidia app. Plus, a couple more screenshots of me enjoying the Glacier Park mountains. I wouldn’t get that close in real life, but it looks so nice in MSFS 2024.

Don’t get me wrong: I can play with very high settings at 40 fps on the Quest 3, but very often I’m not close to the 4090’s 24 GB VRAM capacity. It settles somewhere in the 7-15 GB range. I’m also running 64 GB of RAM and rarely see much above 22 GB in use. So I have these resources, and they seem underutilized.

Yes, but that is very normal. The RTX 4090 still has a lot of VRAM headroom. The second red number at the top left of my picture shows VRAM utilization at 53%, and I did set max resolution in the Oculus app. I would wish for sharper terrain textures at mid and long range, but it doesn’t seem possible, or it gets limited elsewhere. Your VRAM and RAM usage is very normal for the game. It doesn’t go higher as far as I can tell, only when you have a lot of other stuff open and/or are streaming.

What happens if you keep upping resolution? Doesn’t that increase GPU load?

RTX 4080 & 9800X3D CPU here. 64 GB DDR5-6000 CL30

Resolution 3840x1600

Ultra, terrain LOD 400, object LOD 200

With DLAA, I’m getting 40-60 fps (I was getting 90-100 in 2020 with a 5800X3D).

CPU never goes above 60% with ultra settings.

GPU stays around 80% once in sim/flight.

Sometimes the sim gets SUPER chuggy while FS2024 is only using 25-35% CPU, and nothing else was going on.

Why does your GPU or CPU have to be completely utilized all of the time? In a perfect world this would be the case, but MSFS is different. Some settings are CPU based, while others utilize your GPU more. It’s a balancing act.

In my situation, the load is balanced. Not fully utilized, but just balanced between the two. It flips between CPU limited and GPU limited a lot. My framerate is stable, albeit low compared to newer systems.
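A toy model of that “flips between CPU limited and GPU limited” situation, assuming a pipelined renderer where each frame takes as long as the slower of the CPU and GPU work for that frame. The per-frame millisecond figures are made up for illustration, not measured from MSFS 2024, and “CPU” here means the busy fraction of the main thread, not the overall CPU percentage a monitoring tool shows.

```python
# Hypothetical per-frame costs in milliseconds (made-up numbers, not measurements).
# Assumes each frame lasts as long as the slower of its CPU and GPU work,
# so the faster unit sits idle for the remainder of that frame.
frames = [
    (25.0, 18.0),  # CPU-limited frame (e.g. dense city on the main thread)
    (16.0, 24.0),  # GPU-limited frame (e.g. heavy clouds)
    (24.0, 17.0),  # CPU-limited again
    (15.0, 25.0),  # GPU-limited again
]


def utilization(frames):
    """Return (cpu_util, gpu_util, avg_fps) for a list of (cpu_ms, gpu_ms) frames."""
    total_ms = sum(max(cpu, gpu) for cpu, gpu in frames)
    cpu_busy = sum(cpu for cpu, _ in frames)
    gpu_busy = sum(gpu for _, gpu in frames)
    avg_fps = 1000.0 * len(frames) / total_ms
    return cpu_busy / total_ms, gpu_busy / total_ms, avg_fps


cpu_util, gpu_util, fps = utilization(frames)
print(f"CPU {cpu_util:.0%}, GPU {gpu_util:.0%}, {fps:.1f} fps")
```

With these numbers it prints `CPU 82%, GPU 86%, 40.8 fps`: neither side is pegged on average, yet every single frame is limited by one of them, so the apparent headroom never turns into more fps.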

Because why isn’t it running faster? Clearly there’s headroom.

CPU & GPU are rarely “synced”, so something should be the bottleneck.

If it’s not CPU or GPU, what’s the bottleneck?

I have gigabit internet and a Samsung 990 Pro SSD.

If it’s not CPU or GPU, what’s the bottleneck?

Whenever I had discussions like this in the FSX days, no one ever mentioned the API was the bottleneck. You can only send so many draw calls to your GPU at one time. Everything has a limit. You might be hitting that limit.

Look at this: as the number of draw calls goes up, GPU usage goes down.
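A rough sketch of why GPU usage can fall as draw calls rise, assuming draw calls are submitted from a single CPU thread and each call costs a fixed amount of CPU and GPU time. All constants (`CPU_US_PER_DRAW`, `GPU_US_PER_DRAW`, `GPU_FIXED_MS`) are hypothetical, not figures from the video, MSFS, or any driver.

```python
# Toy model: all constants are hypothetical, chosen only to show the trend.
CPU_US_PER_DRAW = 10.0  # assumed CPU-side submission cost per draw call (microseconds)
GPU_US_PER_DRAW = 4.0   # assumed GPU cost per draw call (microseconds)
GPU_FIXED_MS = 12.0     # assumed per-frame GPU work independent of draw count (ms)


def frame_stats(draw_calls):
    """Return (fps, gpu_util) when a single thread submits every draw call."""
    cpu_ms = draw_calls * CPU_US_PER_DRAW / 1000.0
    gpu_ms = GPU_FIXED_MS + draw_calls * GPU_US_PER_DRAW / 1000.0
    frame_ms = max(cpu_ms, gpu_ms)  # the slower side sets the frame time
    return 1000.0 / frame_ms, gpu_ms / frame_ms


for n in (1000, 2000, 4000, 8000):
    fps, gpu_util = frame_stats(n)
    print(f"{n:>5} draws: {fps:5.1f} fps, GPU {gpu_util:.0%}")
```

Once the CPU-side submission cost overtakes the GPU work (beyond 2000 draws with these numbers), fps drops and the GPU’s share of each frame falls from 100% toward 55%. Lower per-call overhead and recording command lists on multiple threads are what DX12 offers to shrink or spread that CPU cost, but only if the engine is built to use them.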

https://www.youtube-nocookie.com/embed/TV864UUnF2w

My 4090 is being used a LOT more in 2024 than it was in 2020. I thought the general consensus was that ’24 uses the GPU a lot more; this is the first I’ve heard of someone saying their 4090 is not being used as much.

As the old lady said in the commercial, “That’s not how this works. That’s not how any of this works.”

I’m assuming some here haven’t seen the FPS-VRAM bug on the bug forum?

I agree “constant improvement” in tech.

Your 5800X3D doesn’t have as high a boost clock as other CPUs.

There’s a chance that the dominant thread is limited by boost clock, and by how long the CPU can sustain it.
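A back-of-the-envelope sketch of that idea, assuming the dominant thread needs a fixed number of cycles per frame (`MAIN_THREAD_CYCLES_PER_FRAME`, a made-up figure), so its frame time scales inversely with clock speed. In reality cache hits change the cycle count, which is exactly where X3D V-Cache helps, so treat this as illustration only. The second function shows how one saturated thread can hide behind a low overall CPU percentage.

```python
# Hypothetical figure: cycles of work the dominant (main) thread needs per frame.
# Assumes constant IPC and ignores cache effects, which in reality change this number.
MAIN_THREAD_CYCLES_PER_FRAME = 100e6


def main_thread_fps_ceiling(clock_ghz):
    """Upper bound on fps if the main thread must finish its fixed work every frame."""
    frame_seconds = MAIN_THREAD_CYCLES_PER_FRAME / (clock_ghz * 1e9)
    return 1.0 / frame_seconds


def overall_cpu_percent(per_thread_busy):
    """Average busy fraction across all logical processors, as most monitoring tools report it."""
    return 100.0 * sum(per_thread_busy) / len(per_thread_busy)


# An 8-core/16-thread CPU: main thread pegged, five workers half busy, the rest nearly idle.
threads = [1.0] + [0.5] * 5 + [0.1] * 10

for ghz in (4.5, 5.0):
    print(f"{ghz} GHz -> main-thread ceiling {main_thread_fps_ceiling(ghz):.1f} fps")
print(f"overall CPU: {overall_cpu_percent(threads):.0f}% with one thread at 100%")
```

With these assumptions, going from 4.5 to 5.0 GHz lifts the ceiling from 45 to 50 fps, while the overall CPU reading sits around 28% even though the main thread is the bottleneck.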

I see better performance in 2024 on my quad-core Xeon and GTX 1080 Ti, so yes, Asobo have added some DX12 magic. Smoother, but defo CPU-bound.

Anecdotal evidence from X3D users is that they don’t quite get the same benefit in 2024 as they did in 2020.

The higher-clocked Ryzens, above 5 GHz, tend to have 64 MB of L3 cache. Perhaps that is the new sweet spot?

So my upgrade shopping list is targeting 5 GHz.

On Discord there is chatter about future Nvidia cards having a decompression unit to bypass the CPU further. Time will tell.

Can you link it?

All the DX12 talk in dev circles is that optimization is up to the developers. Giving the CPU direct access to VRAM is a complicated thing, and it is reasonable to say that not all game devs have a 100% handle on it.

Sure, there are times when my 4090 is running at 97-99%, but very often it’s not, and I don’t see the increase in FPS from that “available” headroom that I thought we’d see. As for the 5800X3D in VR, the load falls on the GPU, and it does its thing well. In 2024, the V-Cache might simply be outdone by more cores, whereas the old main-thread issue on 1-2 cores benefited from V-Cache.

It seems to me the Dynamic Settings really affect behavior in a way that it’s hard to see what is going on.

I will be playing around with Dynamic Settings off to see where this utilization really sits. Dynamic Settings seems like the “rug pull” of 2024. It could be good, it could be bad: changes that are too aggressive, changes that are too slow, etc., all affecting utilization.

The same reason my truck doesn’t go faster when I fill the 40-gallon gas tank.